New Scientist reports: When you read this sentence to yourself, it’s likely that you hear the words in your head. Now, in what amounts to technological telepathy, others are on the verge of being able to hear your inner dialogue too. By peering inside the brain, it is possible to reconstruct speech from the activity that takes place when we hear someone talking.

Because this brain activity is thought to be similar whether we hear a sentence or think the same sentence, the discovery brings us a step closer to broadcasting our inner thoughts to the world without speaking. The implications are enormous – people made mute through paralysis or locked-in syndrome could regain their voice. It might even be possible to read someone’s mind.

Imagine a musician watching a piano being played with no sound, says Brian Pasley at the University of California, Berkeley. “If a pianist were watching a piano being played on TV with the sound off, they would still be able to work out what the music sounded like because they know what key plays what note,” Pasley says. His team has done something analogous with brain waves, matching neural areas to their corresponding noises.

How the brain converts speech into meaningful information is a bit of a puzzle. The basic idea is that sound activates sensory neurons, which then pass this information to different areas of the brain where various aspects of the sound are extracted and eventually perceived as language. Pasley and colleagues wondered whether they could identify where some of the most vital aspects of speech are extracted by the brain.

The team presented spoken words and sentences to 15 people having surgery for epilepsy or a brain tumour. Electrodes recorded neural activity from the surface of the superior and middle temporal gyri – an area of the brain near the ear that is involved in processing sound. From these recordings, Pasley’s team set about decoding which aspects of speech were related to what kind of brain activity. [Continue reading…]

Profit vs. principle: the neurobiology of integrity

Wired Science reports: Let your better self rest assured: Dearly held values truly are sacred, and not merely cost-benefit analyses masquerading as noble intent, concludes a new study on the neurobiology of moral decision-making. Such values are conceived differently, and occur in very different parts of the brain, than utilitarian decisions.

“Why do people do what they do?” said neuroscientist Greg Berns of Emory University. “Asked if they’d kill an innocent human being, most people would say no, but there can be two very different ways of coming to that answer. You could say it would hurt their family, that it would be bad because of the consequences. Or you could take the Ten Commandments view: You just don’t do it. It’s not even a question of going beyond.”

In a study published Jan. 23 in Philosophical Transactions of the Royal Society B, Berns and colleagues posed a series of value-based statements to 27 women and 16 men while using an fMRI machine to map their mental activity. The statements were not necessarily religious, but intended to cover a spectrum of values ranging from frivolous (“You enjoy all colors of M&Ms”) to ostensibly inviolate (“You think it is okay to sell a child”).

After answering, test participants were asked if they’d sign a document stating the opposite of their belief in exchange for a chance at winning up to $100 in cash. If so, they could keep both the money and the document; only their consciences would know.

According to Berns, this methodology was key. The conflict between utilitarian and duty-based moral motivations is a classic philosophical theme, with historical roots in the formulations of Jeremy Bentham and Immanuel Kant, and other researchers have studied it — but none, said Berns, had combined both brain imaging and a situation where moral compromise was realistically possible.

“Hypothetical vignettes are presented to people, and they’re asked, ‘How did you arrive at a decision?’ But it’s impossible to really know in a laboratory setting,” said Berns. “Signing your name to something for a price is meaningful. It’s getting into integrity. Even at $100, most all our test subjects put some things into categories they were willing to take money for, and others they wouldn’t.”

When test subjects agreed to sell out, their brains displayed common signatures of activity in regions previously linked to calculating utility. When they refused, activity was concentrated in other parts of their brains: the ventrolateral prefrontal cortex, which is known to be involved in processing and understanding abstract rules, and the right temporoparietal junction, which has been implicated in moral judgement.

Video: Daniel Wolpert — the real reason for brains

Video: How we read each other’s minds

The intricate circuitry revealed in new mapping of the neurons of touch

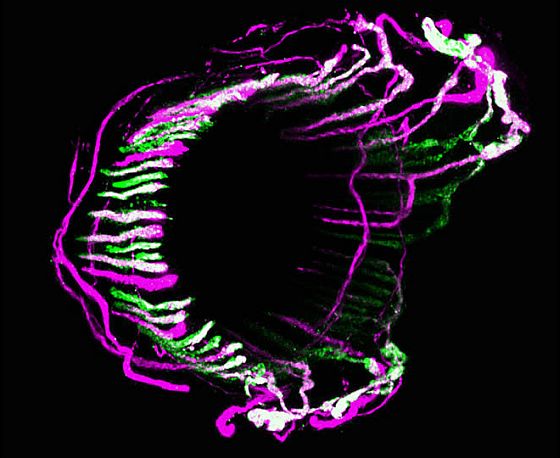

Guard hair palisade neural endings cell in a mouse.

Wired reports on a study [PDF] mapping tactile neurons, co-authored by Jeff Woodbury of the University of Wyoming: To untangle the wiring, Woodbury and other researchers led by neuroscientist David Ginty of Johns Hopkins University began with mouse nervous systems. They focused on a class of nerve endings called low-threshold mechanosensory receptors, or LTMRs, which are sensitive to the slightest of sensations: a mosquito’s footsteps, a hint of breeze.

The researchers identified genes active only in types of LTMRs at the base of ultra-sensitive hairs. Then, in mouse embryos, they tagged those genes with fluorescent proteins. The result: an engineered mouse strain illuminating the full, precise paths of LTMR cells.

Under a microscope, the researchers tracked three different types of LTMRs in never-before-seen detail, plus a fourth well-studied type. “This enabled us, for the first time, to nail down and tie specialized nerve endings to functions,” Woodbury said.

By following LTMRs all the way into ganglia and out the other side into the spinal cord, the researchers discovered a layering pattern that resembles the organization of neurons in the cerebral cortex — the outermost layer of the brain with major roles in consciousness, memory, attention and other functions.

With four of the two dozen different nerve ending types now traceable, the researchers are working to tag the rest. Someday, perhaps, their technique could lead to complete neural maps of touch.

The power of networks: knowledge in an age of infinite interconnectedness

The divided brain

Neuroscience vs philosophy: Taking aim at free will

The leading science journal, Nature, reports: The experiment helped to change John-Dylan Haynes’s outlook on life. In 2007, Haynes, a neuroscientist at the Bernstein Center for Computational Neuroscience in Berlin, put people into a brain scanner in which a display screen flashed a succession of random letters. He told them to press a button with either their right or left index fingers whenever they felt the urge, and to remember the letter that was showing on the screen when they made the decision. The experiment used functional magnetic resonance imaging (fMRI) to reveal brain activity in real time as the volunteers chose to use their right or left hands. The results were quite a surprise.

“The first thought we had was ‘we have to check if this is real’,” says Haynes. “We came up with more sanity checks than I’ve ever seen in any other study before.”

The conscious decision to push the button was made about a second before the actual act, but the team discovered that a pattern of brain activity seemed to predict that decision by as many as seven seconds. Long before the subjects were even aware of making a choice, it seems, their brains had already decided.

As humans, we like to think that our decisions are under our conscious control — that we have free will. Philosophers have debated that concept for centuries, and now Haynes and other experimental neuroscientists are raising a new challenge. They argue that consciousness of a decision may be a mere biochemical afterthought, with no influence whatsoever on a person’s actions. According to this logic, they say, free will is an illusion. “We feel we choose, but we don’t,” says Patrick Haggard, a neuroscientist at University College London.

You may have thought you decided whether to have tea or coffee this morning, for example, but the decision may have been made long before you were aware of it. For Haynes, this is unsettling. “I’ll be very honest, I find it very difficult to deal with this,” he says. “How can I call a will ‘mine’ if I don’t even know when it occurred and what it has decided to do?”

Philosophers aren’t convinced that brain scans can demolish free will so easily. Some have questioned the neuroscientists’ results and interpretations, arguing that the researchers have not quite grasped the concept that they say they are debunking. Many more don’t engage with scientists at all. “Neuroscientists and philosophers talk past each other,” says Walter Glannon, a philosopher at the University of Calgary in Canada, who has interests in neuroscience, ethics and free will.

There are some signs that this is beginning to change. This month, a raft of projects will get under way as part of Big Questions in Free Will, a four-year, US$4.4-million programme funded by the John Templeton Foundation in West Conshohocken, Pennsylvania, which supports research bridging theology, philosophy and natural science. Some say that, with refined experiments, neuroscience could help researchers to identify the physical processes underlying conscious intention and to better understand the brain activity that precedes it. And if unconscious brain activity could be found to predict decisions perfectly, the work really could rattle the notion of free will. “It’s possible that what are now correlations could at some point become causal connections between brain mechanisms and behaviours,” says Glannon. “If that were the case, then it would threaten free will, on any definition by any philosopher.” [Continue reading…]

Anesthesia may leave patients conscious — and finally show consciousness in the brain

Vaughan Bell writes: During surgery, a patient awakes but is unable to move. She sees people dressed in green who talk in strange slowed-down voices. There seem to be tombstones nearby and she assumes she is at her own funeral. Slipping back into oblivion, she awakes later in her hospital bed, troubled by her frightening experiences.

These are genuine memories from a patient who regained awareness during an operation. Her experiences are clearly a distorted version of reality but crucially, none of the medical team was able to tell she was conscious.

This is because medical tests for consciousness are based on your behavior. Essentially, someone talks to you or prods you, and if you don’t respond, you’re assumed to be out cold. Consciousness, however, is not defined as a behavioral response but as a mental experience. If I were completely paralyzed, I could still be conscious and I could still experience the world, even if I was unable to communicate this to anyone else.

This is obviously a pressing medical problem. Doctors don’t want people to regain awareness during surgery because the experiences may be frightening and even traumatic. But on a purely scientific level, these fine-grained alterations in our awareness may help us understand the neural basis of consciousness. If we could understand how these drugs alter the brain and could see when people flicker into consciousness, we could perhaps understand what circuits are important for consciousness itself. Unfortunately, surgical anesthesia is not an ideal way of testing this because several drugs are often used at once and some can affect memory, meaning that the patient could become conscious during surgery but not remember it afterwards, making it difficult to do reliable retrospective comparisons between brain function and awareness.

An attempt to solve this problem was behind an attention-grabbing new study, led by Valdas Noreika from the University of Turku in Finland, that investigated the extent to which common surgical anesthetics can leave us behaviorally unresponsive but subjectively conscious. [Continue reading…]

Why you don’t really have free will

Professor Jerry A. Coyne, from the Department of Ecology and Evolution at The University of Chicago, writes: Perhaps you’ve chosen to read this essay after scanning other articles on this website. Or, if you’re in a hotel, maybe you’ve decided what to order for breakfast, or what clothes you’ll wear today.

You haven’t. You may feel like you’ve made choices, but in reality your decision to read this piece, and whether to have eggs or pancakes, was determined long before you were aware of it — perhaps even before you woke up today. And your “will” had no part in that decision. So it is with all of our other choices: not one of them results from a free and conscious decision on our part. There is no freedom of choice, no free will. And those New Year’s resolutions you made? You had no choice about making them, and you’ll have no choice about whether you keep them.

The debate about free will, long the purview of philosophers alone, has been given new life by scientists, especially neuroscientists studying how the brain works. And what they’re finding supports the idea that free will is a complete illusion.

The issue of whether we have free will is not an arcane academic debate about philosophy, but a critical question whose answer affects us in many ways: how we assign moral responsibility, how we punish criminals, how we feel about our religion, and, most important, how we see ourselves — as autonomous or automatons. [Continue reading…]

Avian mathematicians and musicians

Discovery News reports: Pigeons may be ubiquitous, but they’re also brainy, according to a new study that found these birds are on par with primates when it comes to numerical competence.

The study, published in the latest issue of the journal Science, discovered that pigeons can discriminate between different amounts of number-like objects, order pairs, and learn abstract mathematical rules. Aside from humans, only rhesus monkeys have exhibited equivalent skills.

“It would be fair to say that, even among birds, pigeons are not thought to be the sharpest crayon in the box,” lead author Damian Scarf told Discovery News. “I think that this ability may be widespread among birds. There is already clear evidence that it is widespread among primates.”

The neural pathways that allow pigeons to do math might be connected to the ones that enable cockatoos to dance. Both skills hinge on the ability to conceptualize uniform units — the most abstract representations of space and time.

Turning war into ‘peace’ by deleting and replacing memories

Imagine soldiers who couldn’t be traumatized; who could engage in the worst imaginable brutality and not only remember nothing, but remember something else, completely benign. That might just sound like dystopian science fiction, but ongoing research is laying the foundations to turn this into reality.

Alison Winter, author of Memory: Fragments of a Modern History, from which the following is adapted, writes:

The first speculative steps are now being taken in an attempt to develop techniques of what is being called “therapeutic forgetting.” Military veterans suffering from PTSD are currently serving as subjects in research projects on using propranolol to mitigate the effects of wartime trauma. Some veterans’ advocates criticize the project because they see it as a “metaphor” for how the “administration, Defense Department, and Veterans Affairs officials, not to mention many Americans, are approaching the problem of war trauma during the Iraq experience.”

The argument is that terrible combat experiences are “part of a soldier’s life” and are “embedded in our national psyche, too,” and that these treatments reflect an illegitimate wish to forget the pain suffered by war veterans. Tara McKelvey, who researched veterans’ attitudes to the research project, quoted one veteran as disapproving of the project on the grounds that “problems have to be dealt with.” This comment came from a veteran who spends time “helping other veterans deal with their ghosts, and he gives talks to high school and college students about war.” McKelvey’s informant felt that the definition of who he was “comes from remembering the pain and dealing with it — not from trying to forget it.” The assumption here is that treating the pain of war pharmacologically is equivalent to minimizing, discounting, disrespecting and ultimately setting aside altogether the sacrifices made by veterans, and by society itself. People who objected to the possibility of altering emotional memories with drugs were concerned that this amounted to avoiding one’s true problems instead of “dealing” with them. An artificial record of the individual past would by the same token contribute to a skewed collective memory of the costs of war.

In addition to the work with veterans, there have been pilot studies with civilians in emergency rooms. In 2002, psychiatrist Roger Pitman of Harvard took a group of 31 volunteers from the emergency rooms at Massachusetts General Hospital, all people who had suffered some traumatic event, and for 10 days treated some with a placebo and the rest with propranolol [a beta blocker]. Those who received propranolol later had no stressful physical response to reminders of the original trauma, while almost half of the others did. Should those E.R. patients have been worried about the possible legal implications of taking the drug? Could one claim to be as good a witness once one’s memory had been altered by propranolol? And in a civil suit, could the defense argue that less harm had been done, since the plaintiff had avoided much of the emotional damage that an undrugged victim would have suffered? Attorneys did indeed ask about the implications for witness testimony, damages, and more generally, a devaluation of harm to victims of crime. One legal scholar framed this as a choice between protecting memory “authenticity” (a category he used with some skepticism) and “freedom of memory.” Protecting “authenticity” could not be done without sacrificing our freedom to control our own minds, including our acts of recall.

The anxiety provoked by the idea of “memory dampening” is so intriguing that even the President’s Council on Bioethics, convened by President George W. Bush in his first term, thought the issue important enough to reflect on it alongside discussions of cloning and stem-cell research. Editing memories could “disconnect people from reality or their true selves,” the council warned. While it did not give a definition of “selfhood,” it did give examples of how such techniques could warp us by “falsifying our perception and understanding of the world.” The potential technique “risks making shameful acts seem less shameful, or terrible acts less terrible, than they really are.”

Meanwhile, David DiSalvo notes ten brain science studies from 2011 including this:

Brain Implant Enables Memories to be Recorded and Played Back

Neural prosthetics had a big year in 2011, and no development in this area was bigger than an implant designed to record and replay memories.

Researchers had a group of rats with the implant perform a simple memory task: get a drink of water by hitting one lever in a cage, then—after a distraction—hitting another. They had to remember which lever they’d already pushed to know which one to push the second time. As the rats did this memory task, electrodes in the implants recorded signals between two areas of their brains involved in storing new information in long-term memory.

The researchers then gave the rats a drug that kept those brain areas from communicating. The rats still knew they had to press one lever then the other to get water, but couldn’t remember which lever they’d already pressed. When researchers played back the neural signals they’d recorded earlier via the implants, the rats again remembered which lever they had hit, and pressed the other one. When researchers played back the signals in rats not on the drug (thus amplifying their normal memory) the rats made fewer mistakes and remembered which lever they’d pressed even longer.

The bottom line: This is ground-level research demonstrating that neural signals involved in memory can be recorded and replayed. Progress from rats to humans will take many years, but even knowing that it’s plausible is remarkable.

Honeybee democracy

Joseph Castro reports: Honeybees choose new nest sites by essentially head-butting each other into a consensus, shows a new study.

When scout bees find a new potential home, they do a waggle dance to broadcast to other scout bees where the nest is and how suitable it is for the swarm. The nest with the most support in the end becomes the swarm’s new home.

But new research shows another layer of complexity to the decision-making process: The bees deliver “stop signals” via head butts to scouts favoring a different site. With enough head butts, a scout bee will stop its dance, decreasing the apparent support for that particular nest.

This process of excitation (waggle dances) and inhibition (head butts) in the bee swarm parallels how a complex brain makes decisions using neurons, the researchers say.
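The interplay of excitation and inhibition described above can be sketched as a toy simulation. This is only an illustration, not the model used in the study: the function name `simulate_nest_choice`, the rate parameters, and the starting fractions are all assumptions chosen for the example. It tracks the fraction of scouts dancing for each of two sites, with waggle dances recruiting uncommitted scouts in proportion to site quality and head butts from the rival camp pushing dancers to quit.

```python
def simulate_nest_choice(quality_a, quality_b, stop_rate,
                         leave_rate=0.1, start=0.05, dt=0.01, steps=2000):
    """Toy two-site model (all parameters are hypothetical).

    Returns the final fractions of scouts dancing for site A and site B.
    """
    a = b = start  # both sites begin with the same small share of dancers
    for _ in range(steps):
        uncommitted = 1.0 - a - b
        # Excitation: dancers recruit uncommitted scouts, scaled by site quality.
        # Inhibition: dancers receive stop signals (head butts) from the rival camp.
        # Dancers also drift away on their own at a constant leave_rate.
        da = a * (quality_a * uncommitted - leave_rate - stop_rate * b)
        db = b * (quality_b * uncommitted - leave_rate - stop_rate * a)
        a += da * dt  # simple Euler step
        b += db * dt
    return a, b


with_stop = simulate_nest_choice(1.0, 0.8, stop_rate=2.0)
without_stop = simulate_nest_choice(1.0, 0.8, stop_rate=0.0)
print("with stop signals:    A=%.3f B=%.3f" % with_stop)
print("without stop signals: A=%.3f B=%.3f" % without_stop)
```

In this sketch, the slightly better site A ends up ahead in both runs, but support for the weaker site B collapses far more completely when stop signals are switched on, which is the sharpening effect the inhibition is meant to illustrate.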

“Other studies have suggested that there could be a close relationship between collective decision-making in a swarm of bees and the brain,” said Iain Couzin, an evolutionary biologist at Princeton University, who was not involved in the study.

“But this [study] takes it to a new level by showing that a fundamental process that’s very important in human decision-making is similarly important to honeybee decision-making.”

When honeybees outgrow their hive, several thousand workers leave the nest with their mother queen to establish a new colony. A few hundred of the oldest, most experienced bees, called scout bees, fly out to find that new nest.

“They then run a popularity contest with a dance party,” said Thomas Seeley, a biologist at Cornell University and lead author of the new study. When a scout bee finds a potential nest site, it advertises the site with a waggle dance, which points other scouts to the nest’s location. The bees carefully adjust how long they dance based on the quality of the site.

“We thought it was just a race to see which group of scout bees could attract a threshold number of bees,” Seeley told LiveScience.

But in 2009, Seeley learned that there might be more to the story. He discovered that a bee could produce a stop-dancing signal by butting its head against a dancer and making a soft beep sound with a flight muscle. An accumulation of these head butts would eventually cause the bee to stop dancing. Seeley observed that the colony used these stop signals to reduce the number of bees recruited to forage from a perilous food source, but he wondered if the bees also used the head butts during nest hunting.

Thomas Seeley talks about Honeybee Democracy: