Robinson Meyer writes: It’s sometimes said that modern science spends too much time on the documentation of a new trend and too little time on the replication of old ones. A new paper published Wednesday in the open-access journal Science Advances is important precisely because it does the latter. In fact, it sheds light on the scientific process in action, and also reveals how climate-change denialists can muddy that process.

Here’s the big takeaway from the new study: Across the planet, the ocean surface has been warming at a relatively steady clip over the past 50 years.

This warming trend shows up whether the ocean is measured by buoy, by satellite, or by autonomous floating drone. It also shows up in the global temperature dataset created and maintained by the National Oceanic and Atmospheric Administration (NOAA).

In fact, the warming shows up in both datasets in essentially the same way. This is important because it confirms the integrity of the NOAA dataset — and adds further evidence to the argument that ocean temperatures have steadily warmed this century without a significant slowdown.

“Our results essentially confirm that NOAA got it right,” says Zeke Hausfather, a researcher and economist at the University of California, Berkeley. “They weren’t cooking the books. They weren’t bowing to any political pressure to find results that show extra warming. They were a bunch of scientists trying their hardest to work with messy data.”

Here’s why the finding matters: In June 2015, NOAA published an update to its long-running dataset of historical global temperatures. Thomas Karl, the director of the National Centers for Environmental Information, and his colleagues at NOAA explained in a paper in Science that the old database had a critical flaw. In trying to merge temperature readings taken by ships and buoys, NOAA had been allowing “cooling bias” to seep into its numbers.

In other words, NOAA’s global temperature estimates had been too low, and its measurement of climate change was too conservative. With this newly updated data in hand, Karl and his colleagues found there had been no slowdown in global warming during the 2000s.

NOAA’s new findings disagreed with those of the U.K. Met Office, whose widely used global temperature dataset does show a slowdown in the 2000s.

So: Was there a slowdown? This is a question of genuine scientific interest. Researchers have pointed to El Niño, to multiyear oceanic cycles, and to the post-Soviet reforestation of Russia as possible explanations for the change.

But here’s the thing: The slowdown, or lack thereof, never threw the larger phenomenon of human-caused climate change into question. In fact, even by the most conservative estimates, the globe kept warming right through the slowdown. The overwhelming consensus of Earth scientists is that the planet is harmfully warming due to human industrial activity. What’s more, if a slowdown did occur in the 2000s, it seems to have abated now. Each of the past three years, 2014, 2015, and 2016, has broken the record as the hottest year in the modern temperature record.

But this hasn’t seemed to matter in public debate, as climate-change denialists have found enormous success casting doubt on global warming by glomming onto this “slowdown” debate. [Continue reading…]


NASA’s overlooked duty to look inward

Elisa Gabbert writes: In 1942, not long after the attack on Pearl Harbor, the poet Archibald MacLeish wrote an essay called “The Image of Victory,” in which he asked what winning the Second World War, the “airman’s war,” would mean for posterity. MacLeish believed that pilots could do more than bring victory; by literally rising above the conflicts on the ground, they could also reshape our very understanding of the planet. “Never in all their history have men been able truly to conceive of the world as one: a single sphere, a globe, having the qualities of a globe, a round earth in which all the directions eventually meet, in which there is no center because every point, or none, is center — an equal earth which all men occupy as equals,” he wrote. The airplane, he felt, was both an engine of perspective and a symbol of unity.

MacLeish could not, perhaps, have imagined the sight of a truly whole Earth. But, twenty-six years after his essay appeared, the three-man crew of Apollo 8 reached the highest vantage point in history, becoming the first humans to witness Earth rising over the surface of the moon. The most iconic photograph of our planet, popularly known as “The Blue Marble,” was taken by their successors on Apollo 17, in 1972. In it, Earth appears in crisp focus, brightly lit, as in studio portraiture, against a black backdrop. The picture clicked with the cultural moment. As the neuroscientist Gregory Petsko observed, in 2011, in an essay on the consciousness-shifting power of images, it became a symbol of the budding environmentalist movement. “Our whole planet suddenly, in this image, seemed tiny, vulnerable, and incredibly lonely against the vast blackness of the cosmos,” Petsko wrote. “Regional conflict and petty differences could be dismissed as trivial compared with environmental dangers that threatened all of humanity.” Apollo 17 marked America’s last mission to the moon, and the last time that humans left Earth’s orbit.

It was always part of NASA’s mission to look inward, not just outward. The National Aeronautics and Space Act of 1958, which established the agency, claimed as its first objective “the expansion of human knowledge of phenomena in the atmosphere and space.” NASA’s early weather satellites were followed, in the seventies and eighties, by a slew of more advanced instruments, which supplied data on the ozone layer, crops and vegetation, and even insect infestations. They allowed scientists to recognize and measure the symptoms of climate change, and their decades’ worth of data helped the Intergovernmental Panel on Climate Change conclude, in 2007, that global warming is “very likely” anthropogenic. According to a report released last month by NASA’s inspector general, the agency’s Earth Science Division helps commercial, government, and military organizations around the world locate areas at risk for storm-related flooding, predict malaria outbreaks, develop wildfire models, assess air quality, identify remote volcanoes whose toxic emissions contribute to acid rain, and determine the precise length of a day. [Continue reading…]

Why does NASA study Earth?

Why do we study our home planet? 'Cause we're the space agency! Learn more about our Earth science missions: https://t.co/5EDqdCUwlh pic.twitter.com/VDaKpCZWwD

— NASA Earth (@NASAEarth) December 20, 2016

Learn more about NASA’s Earth science missions.

Donald Trump’s war on science

Lawrence M. Krauss writes: Last week, the House of Representatives Committee on Science, Space, and Technology tweeted a misleading story from Breitbart News: “Global Temperatures Plunge. Icy Silence from Climate Alarmists.” (There is always some drop in temperature when El Niño transitions into La Niña, but there has been no anomalous plunge.) Under normal circumstances, this tweet wouldn’t be so surprising: Lamar Smith, the chair of the committee since 2013, is a well-known climate-change denier. But these are not normal times. The tweet is best interpreted as something new: a warning shot. It’s a sign of things to come, a declaration of the Trump Administration’s intent to sideline science.

In a 1946 essay, George Orwell wrote that “to see what is in front of one’s nose needs a constant struggle.” It’s not just that we’re easily misled. It’s that, by “impudently twisting the facts,” we can convince ourselves of “things which we know to be untrue.” A whole society, he wrote, can deceive itself “for an indefinite time,” and the only check on that mass delusion is that “sooner or later a false belief bumps up against solid reality.” Science is one source of that solid reality. The Trump Administration seems determined to keep it at bay, and the consequences for society and the environment will be profound.

The first sign of Trump’s intention to spread lies about empirical reality, “1984”-style, was, of course, the appointment of Steve Bannon, the former executive chairman of the Breitbart News Network, as Trump’s “senior counselor and strategist.” This year, Breitbart hosted stories with titles such as “1001 Reasons Why Global Warming Is So Totally Over in 2016,” despite the fact that 2016 is now overwhelmingly on track to be the hottest year on record, beating 2015, which beat 2014, which beat 2013. Such stories do more than spread disinformation. Their purpose is the creation of an alternative reality — one in which scientific evidence is a sham — so that hyperbole and fearmongering can divide and conquer the public. [Continue reading…]

Trump questionnaire recalls dark history of ideology-driven science

By Paul N. Edwards, University of Michigan

President-elect Trump has called global warming “bullshit” and a “Chinese hoax.” He has promised to withdraw from the 2015 Paris climate treaty and to “bring back coal,” the world’s dirtiest, most carbon-intensive fuel. The incoming administration has paraded a roster of climate change deniers for top jobs. On Dec. 13, Trump named former Texas Governor Rick Perry, another climate change denier, to lead the Department of Energy (DoE), an agency Perry said he would eliminate altogether during his 2011 presidential campaign.

Just days earlier, the Trump transition team presented the DoE with a 74-point questionnaire that has raised alarm among employees because the questions appear to target people whose work is related to climate change.

For me, as a historian of science and technology, the questionnaire – bluntly characterized by one DoE official as a “hit list” – is starkly reminiscent of the worst excesses of ideology-driven science, seen everywhere from the U.S. Red Scare of the 1950s to the Soviet and Nazi regimes of the 1930s.

The questionnaire asks for a list of “all DoE employees or contractors” who attended the annual Conferences of Parties to the United Nations Framework Convention on Climate Change – a binding treaty commitment of the U.S., signed by George H. W. Bush in 1992. Another question seeks the names of all employees involved in meetings of the Interagency Working Group on the Social Cost of Carbon, responsible for technical guidance quantifying the economic benefits of avoided climate change.

It also targets the scientific staff of DoE’s national laboratories. It requests lists of all professional societies scientists belong to, all their publications, all websites they maintain or contribute to, and “all other positions… paid and unpaid,” which they may hold. These requests, too, are likely aimed at climate scientists, since most of the national labs conduct research related to climate change, including climate modeling, data analysis and data storage.

How Trump could wage a war on scientific expertise

Ed Yong writes: In September, the U.S. Food and Drug Administration banned 19 chemicals from common antibacterial washes, because manufacturers hadn’t shown that they were safe in the long run, or any better than plain soap and water. In October, the Environmental Protection Agency (EPA) updated a rule forcing dozens of states to reduce levels of ozone and other air pollutants coming out of power plants, a move that would protect hundreds of millions of Americans from lung diseases. In the same month, the EPA and the National Highway Traffic Safety Administration enacted a rule that limits the carbon dioxide emissions from heavy-duty vehicles like trucks and tractors.

In a few months, these regulations could vanish, along with over 100 others designed to protect the health, safety, and welfare of Americans.

To an extent, regulations are necessary. Laws like the Clean Air Act, Clean Water Act, Occupational Safety and Health Act, and many others have been instrumental in improving health, saving lives, and protecting the environment. These rules are multiplying. Their opponents argue that they limit businesses, stifle innovation, add red tape, and cost jobs. Their defenders say that they boost efficiency, create employment in new sectors, and are moral imperatives regardless of costs.

It is clear where President-elect Donald Trump stands. “The monstrosity that is the Federal Government with its pages and pages of rules and regulations has been a disaster for the American economy and job growth,” he said during his campaign. Come January, he will have the power to take on that perceived monster. [Continue reading…]

NASA’s indispensable role in climate research

Adam Frank writes: On April 1, 1960, the newly established National Aeronautics and Space Administration heaved a 270-pound box of electronics into Earth orbit. In those days, getting anything into space was a major achievement. But the real significance of that early satellite, Tiros-1, was not its survival, but its mission: Its sensors were not pointed outward toward deep space, but downward, at the Earth.

Tiros-1 was the world’s first weather satellite. After its launch, Americans would never again be caught without warning as storms approached.

This small piece of history says a lot about the call by Bob Walker, an adviser to President-elect Donald J. Trump who worked with his campaign on space policy, to defund NASA’s earth science efforts, moving those functions to other agencies and letting it focus on deep-space research. “Earth-centric science is better placed at other agencies where it is their prime mission,” he told The Guardian.

NASA critics have long wanted to shut the agency out of research related to climate change. The problem is that earth science is not only a long-running part of NASA’s “prime mission,” but also something the agency is uniquely positioned to do. Without NASA, climate research worldwide would be hobbled. [Continue reading…]

Dino-killing asteroid may have punctured Earth’s crust

Live Science reports: After analyzing the crater from the cosmic impact that ended the age of dinosaurs, scientists now say the object that smacked into the planet may have punched nearly all the way through Earth’s crust, according to a new study.

The finding could shed light on how impacts can reshape the faces of planets and how such collisions can generate new habitats for life, the researchers said.

Asteroids and comets occasionally pelt Earth’s surface. Still, changes to the planet’s surface result largely from erosion due to rain and wind, “as well as plate tectonics, which generates mountains and ocean trenches,” said study co-author Sean Gulick, a marine geophysicist at the University of Texas at Austin.

In contrast, on the solar system’s other rocky planets, erosion and plate tectonics typically have little, if any, influence on the planetary surfaces. “The key driver of surface changes on those planets is constantly getting hit by stuff from space,” Gulick told Live Science. [Continue reading…]

Trump to scrap NASA climate research in crackdown on ‘politicized science’

The Guardian reports: Donald Trump is poised to eliminate all climate change research conducted by Nasa as part of a crackdown on “politicized science”, his senior adviser on issues relating to the space agency has said.

Nasa’s Earth science division is set to be stripped of funding in favor of exploration of deep space, with the president-elect having set a goal during the campaign to explore the entire solar system by the end of the century.

This would mean the elimination of Nasa’s world-renowned research into temperature, ice, clouds and other climate phenomena. Nasa’s network of satellites provides a wealth of information on climate change, with the Earth science division’s budget set to grow to $2bn next year. By comparison, space exploration has been scaled back somewhat, with a proposed budget of $2.8bn in 2017.

Bob Walker, a senior Trump campaign adviser, said there was no need for Nasa to do what he has previously described as “politically correct environmental monitoring”. [Continue reading…]

The moment when science went modern

Lorraine Daston writes: The history of science is punctuated by not one, not two, but three modernities: the first, in the seventeenth century, known as “the Scientific Revolution”; the second, circa 1800, often referred to as “the second Scientific Revolution”; and the third, in the first quarter of the twentieth century, when relativity theory and quantum mechanics not only overturned the achievements of Galileo and Newton but also challenged our deepest intuitions about space, time, and causation.

Each of these moments transformed science, both as a body of knowledge and as a social and political force. The first modernity of the seventeenth century displaced the Earth from the center of the cosmos, showered Europeans with new discoveries, from new continents to new planets, created new forms of inquiry such as field observation and the laboratory experiment, added prediction to explanation as an ideal toward which science should strive, and unified the physics of heaven and earth in Newton’s magisterial synthesis that served as the inspiration for the political reformers and revolutionaries of the Enlightenment. The second modernity of the early nineteenth century unified light, heat, electricity, magnetism, and gravitation into the single, fungible currency of energy, put that energy to work by creating the first science-based technologies to become gigantic industries (e.g., the manufacture of dyestuffs from coal tar derivatives), turned science into a salaried profession and allied it with state power in every realm, from combating epidemics to waging wars. The third modernity, of the early twentieth century, toppled the certainties of Newton and Kant, inspired the avant-garde in the arts, and paved the way for what were probably the two most politically consequential inventions of the last hundred years: the mass media and the atomic bomb.

The aftershocks of all three of these earthquakes of modernity are still reverberating today: in heated debates, from Saudi Arabia to Sri Lanka to Senegal, about the significance of the Enlightenment for human rights and intellectual freedom; in the assessment of how science-driven technology and industrialization may have altered the climate of the entire planet; in anxious negotiations about nuclear disarmament and utopian visions of a global polity linked by the worldwide Net. No one denies the world-shaking and world-making significance of any of these three moments of scientific modernity.

Yet from the perspective of the scientists themselves, the experience of modernity coincides with none of these seismic episodes. The most unsettling shift in scientific self-understanding — about what science was and where it was going — began in the middle decades of the nineteenth century, reaching its climax circa 1900. It was around that time that scientists began to wonder uneasily about whether scientific progress was compatible with scientific truth. If advances in knowledge were never-ending, could any scientific theory or empirical result count as real knowledge — true forever and always? Or was science, like the monarchies of Europe’s anciens régimes and the boundaries of its states and principalities, doomed to perpetual revision and revolution? [Continue reading…]

Humans aren’t the only primates that can make sharp stone tools

The Guardian reports: Monkeys have been observed producing sharp stone flakes that closely resemble the earliest known tools made by our ancient relatives, proving that this ability is not uniquely human.

Previously, modifying stones to create razor-edged fragments was thought to be an activity confined to hominins, the family including early humans and their more primitive cousins. The latest observations rewrite this view, showing that monkeys unintentionally produce almost identical artefacts simply by smashing stones together.

The findings put archaeologists on alert that they can no longer assume that stone flakes they discover are linked to the deliberate crafting of tools by early humans as their brains became more sophisticated.

Tomos Proffitt, an archaeologist at the University of Oxford and the study’s lead author, said: “At a very fundamental level – if you’re looking at a very simple flake – if you had a capuchin flake and a human flake they would be the same. It raises really important questions about what level of cognitive complexity is required to produce a sophisticated cutting tool.”

Unlike the flakes made by early humans, those produced by the capuchins were the unintentional byproduct of hammering stones – an activity that the monkeys pursued decisively, but the purpose of which was not clear. Originally scientists thought the behaviour was a flamboyant display of aggression in response to an intruder, but after more extensive observations the monkeys appeared to be seeking out the quartz dust produced by smashing the rocks, possibly because it has a nutritional benefit. [Continue reading…]

Great Barrier Reef obituary goes viral, to the horror of scientists

Huffington Post reports: Dead and dying are two very different things.

If a person is diagnosed with a life-threatening illness, their loved ones don’t rush to write an obituary and plan a funeral. Likewise, species aren’t declared extinct until they actually are.

In a viral article entitled “Obituary: Great Barrier Reef (25 Million BC-2016),” however, writer Rowan Jacobsen proclaimed ― inaccurately and, we can only hope, hyperbolically ― that Earth’s largest living structure is dead and gone.

“The Great Barrier Reef of Australia passed away in 2016 after a long illness,” reads the sensational obituary, published Tuesday in Outside Magazine. “It was 25 million years old.”

There’s no denying the Great Barrier Reef is in serious trouble, having been hammered in recent years by El Niño and climate change. In April, scientists from the Australian Research Council’s Centre of Excellence for Coral Reef Studies found that the most severe coral bleaching event on record had impacted 93 percent of the reef.

But as a whole, it is not dead. Preliminary findings from Great Barrier Reef Marine Park Authority surveys, published Thursday, show that 22 percent of its coral died from the bleaching event. That leaves more than three quarters still alive, and in desperate need of relief.

Two leading coral scientists that The Huffington Post contacted took serious issue with Outside’s piece, calling it wildly irresponsible. [Continue reading…]

There may be two trillion other galaxies

Brian Gallagher writes: In 1939, the year Edwin Hubble won the Benjamin Franklin award for his studies of “extra-galactic nebulae,” he paid a visit to an ailing friend. Depressed and a patient at Las Encinas Hospital, a mental health facility, the friend, an actor and playwright named John Emerson, asked Hubble what, spiritually and cosmically, he believed in. In Edwin Hubble: Mariner of the Nebulae, Gale E. Christianson writes that Hubble, a Christian-turned-agnostic, “pulled no punches” in his reply. “The whole thing is so much bigger than I am,” he told Emerson, “and I can’t understand it, so I just trust myself to it, and forget about it.”

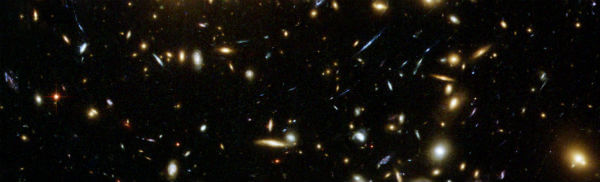

Hubble was moved by a sense of the universe’s immensity, yet it’s arresting to recall how small he thought the cosmos was at the time. “The picture suggested by the reconnaissance,” he wrote in his 1937 book, The Observational Approach to Cosmology, “is a sphere, centred on the observer, about 1,000 million light-years in diameter, throughout which are scattered about 100 million nebulae,” or galaxies. “A suitable model,” he went on, “would be furnished by tennis balls, 50 feet apart, scattered through a sphere 5 miles in diameter.” A series of pictures taken by the instrument later named after him, the Hubble Space Telescope, starting five years after its 1990 launch, showed just how unsuitable that model was.

The first is called the Hubble Deep Field, arguably “the most important image ever taken” according to this YouTube video. (I recommend watching it.) The Hubble gazed, for ten days, at an apparently empty spot in the sky, one about the size of a pinhead held up at arm’s length — a fragment one 24-millionth of the whole sky. The resulting picture had 3,000 objects, almost all of them galaxies in various stages of development, and many of them as far away as 12 billion light-years. Robert Williams, the former director of the Space Telescope Science Institute, wrote in the New York Times, “The image is really a core sample of the universe.” Next came the Ultra Deep Field, in 2003 (after a three-month exposure with a new camera, the Hubble image came back with 10,000 galaxies), then the eXtreme Deep Field, in 2012, a refined version of the Ultra that reveals galaxies that formed just 450 million years after the Big Bang. [Continue reading…]
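Hubble’s 1937 tennis-ball analogy, quoted in the excerpt above, can be checked with a few lines of arithmetic. The Python sketch below uses only the figures in his quotation; the variable names and the equal-cube packing assumption (each galaxy, or ball, occupying its own cube of space) are illustrative choices, not anything Hubble specified.

```python
import math

FEET_PER_MILE = 5280

# Hubble's figures, as quoted.
universe_diameter_ly = 1_000e6   # "about 1,000 million light-years in diameter"
galaxy_count = 100e6             # "about 100 million nebulae"

# His scale model, as quoted.
model_diameter_ft = 5 * FEET_PER_MILE   # "a sphere 5 miles in diameter"
ball_spacing_ft = 50                    # "tennis balls, 50 feet apart"


def sphere_volume(diameter: float) -> float:
    """Volume of a sphere from its diameter: (pi / 6) * d^3."""
    return math.pi / 6 * diameter ** 3


# Light-years represented by one foot of the model.
ly_per_ft = universe_diameter_ly / model_diameter_ft

# Ball spacing in the model, converted back to light-years.
model_spacing_ly = ball_spacing_ft * ly_per_ft

# Spacing implied by the real figures, assuming each galaxy
# occupies an equal cube of space (a simplifying assumption).
real_spacing_ly = (sphere_volume(universe_diameter_ly) / galaxy_count) ** (1 / 3)

# How many 50-foot cubes fit inside the model sphere.
model_ball_count = sphere_volume(model_diameter_ft) / ball_spacing_ft ** 3

print(f"model spacing: {model_spacing_ly / 1e6:.2f} million ly")   # 1.89
print(f"implied spacing: {real_spacing_ly / 1e6:.2f} million ly")  # 1.74
print(f"balls in model: {model_ball_count / 1e6:.0f} million")     # 77
```

On these assumptions the analogy is internally consistent to within roughly ten percent: 50 feet in the model stands for about 1.9 million light-years, close to the 1.7 million implied by his galaxy count, and the model sphere holds about 77 million balls against his 100 million nebulae.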

A plan to defend against the war on science

Shawn Otto writes: Four years ago in Scientific American, I warned readers of a growing problem in American democracy. The article, entitled “Antiscience Beliefs Jeopardize U.S. Democracy,” charted how it had not only become acceptable, but often required, for politicians to embrace antiscience positions, and how those positions flew in the face of the core principles that the U.S. was founded on: That if anyone could discover the truth of something for himself or herself using the tools of science, then no king, no pope and no wealthy lord was more entitled to govern the people than they were themselves. It was self-evident.

In the years since, the situation has gotten worse. We’ve seen the emergence of a “post-fact” politics, which has normalized the denial of scientific evidence that conflicts with the political, religious or economic agendas of authority. Much of this denial centers, now somewhat predictably, on climate change, but not all of it. If any single factor in this election serves as a barometer for all the others, it is the candidates’ attitudes toward science.

Consider, for example, what has been occurring in Congress. Rep. Lamar Smith, the Texas Republican who chairs the House Committee on Science, Space and Technology, is a climate change denier. Smith has used his post to initiate a series of McCarthy-style witch-hunts, issuing subpoenas and demanding private correspondence and testimony from scientists, civil servants, government science agencies, attorneys general and nonprofit organizations whose work shows that global warming is happening, humans are causing it and that — surprise — energy companies sought to sow doubt about this fact.

Smith, who is a Christian Scientist and seems to revel in his role as the science community’s bête noire, is by no means alone. Climate denial has become a virtual Republican Party plank (and rejecting the Paris climate accord a literal one) with a wide majority of Congressional Republicans espousing it. Sen. Ted Cruz (R–Texas), chairman of the Senate’s Subcommittee on Space, Science and Competitiveness, took time off from his presidential campaign last December to hold hearings during the Paris climate summit showcasing well-known climate deniers repeating scientifically discredited talking points.

The situation around science has grown so partisan that Hillary Clinton turned the phrase “I believe in science” into the largest applause line of her convention speech accepting the Democratic Party nomination. Donald Trump, by contrast, is the first major party presidential nominee who is an outright climate denier, having called climate science a “hoax” numerous times. In his responses to the organization I helped found, ScienceDebate.org, which gets presidential candidates on the record on science, he told us that “there is still much that needs to be investigated in the field of ‘climate change,’” putting the term in scare quotes to cast doubt on its reality. When challenged on his hoax comments, campaign manager Kellyanne Conway affirmed that Trump doesn’t believe climate change is man-made. [Continue reading…]

Rewriting Earth’s creation story

Rebecca Boyle writes: Humanity’s trips to the moon revolutionized our view of this planet. As seen from another celestial body, Earth seemed more fragile and more precious; the iconic Apollo 8 image of Earth rising above the lunar surface helped launch the modern environmental movement. The moon landings made people want to take charge of Earth’s future. They also changed our view of its past.

Earth is constantly remaking itself, and over the eons it has systematically erased its origin story, subsuming and cannibalizing its earliest rocks. Much of what we think we know about the earliest days of Earth therefore comes from the geologically inactive moon, which scientists use like a time capsule.

Ever since Apollo astronauts toted chunks of the moon back home, the story has sounded something like this: After coalescing from grains of dust that swirled around the newly ignited sun, the still-cooling Earth would have been covered in seas of magma, punctured by inky volcanoes spewing sulfur and liquid rock. The young planet was showered in asteroids and larger structures called planetesimals, one of which sheared off a portion of Earth and formed the moon. Just as things were finally settling down, about a half-billion years after the solar system formed, the Earth and moon were again bombarded by asteroids whose onslaught might have liquefied the young planet and sterilized it.

Geologists named this epoch the Hadean, after the Greek version of the underworld. Only after the so-called Late Heavy Bombardment quieted some 3.9 billion years ago did Earth finally start to morph into the Edenic, cloud-covered, watery world we know.

But as it turns out, the Hadean may not have been so hellish. New analysis of Earth and moon rocks suggests that instead of a roiling ball of lava, baby Earth was a world with continents, oceans of water, and maybe even an atmosphere. It might not have been bombarded by asteroids at all, or at least not in the large quantities scientists originally thought. The Hadean might have been downright hospitable, raising questions about how long ago life could have arisen on this planet. [Continue reading…]

Sesame particle accelerator project brings Middle East together

The Guardian reports: In a sleepy hillside town in al-Balqa, not far from the Jordan Valley, a grand project is taking shape. The Middle East’s new particle accelerator – the Synchrotron-Light for Experimental Science and Applications, or Sesame – is being built.

In a region racked by violence, extremism and the disintegration of nation states, Sesame feels a world apart; the meditative peace of the surrounding countryside belying the advanced stages of construction inside the site, which is due to be formally inaugurated next spring, with the first experiments taking place as early as this autumn.

It’s a miracle it got off the ground in the first place. Sesame’s members are Iran, Pakistan, Israel, Turkey, Cyprus, Egypt, the Palestinian Authority, Jordan and Bahrain. Iran and Pakistan do not recognise Israel, nor does Turkey recognise Cyprus, and everyone has their myriad diplomatic spats.

Iran, for example, continues to participate despite the assassination of two of its scientists who were involved in the project, quantum physicist Masoud Alimohammadi and nuclear scientist Majid Shahriari, in operations blamed on Israel’s Mossad.

“We’re cooperating very well together,” said Giorgio Paolucci, the scientific director of Sesame. “That’s the dream.” [Continue reading…]

Forget software — now hackers are exploiting physics

Andy Greenberg reports: Practically every word we use to describe a computer is a metaphor. “File,” “window,” even “memory” all stand in for collections of ones and zeros that are themselves representations of an impossibly complex maze of wires, transistors and the electrons moving through them. But when hackers go beyond those abstractions of computer systems and attack their actual underlying physics, the metaphors break.

Over the last year and a half, security researchers have been doing exactly that: honing hacking techniques that break through the metaphor to the actual machine, exploiting the unexpected behavior not of operating systems or applications, but of computing hardware itself, in some cases targeting the actual electric charge that makes up bits of data in computer memory. And at the Usenix security conference earlier this month, two teams of researchers presented attacks they developed that bring that new kind of hack closer to becoming a practical threat.

Both of those new attacks use a technique called “Rowhammer,” which Google researchers first demonstrated as a working exploit last March. The trick works by running a program on the target computer that repeatedly accesses a certain row of memory cells in its DRAM, “hammering” it until a rare glitch occurs: the repeated activity disturbs an adjacent row, causing its cells to leak electric charge. The lost charge then causes a certain bit in that adjacent row of the computer’s memory to flip from one to zero or vice versa. With careful setup, that bit flip can give an attacker access to a privileged level of the computer’s operating system.

It’s messy. And mind-bending. And it works. [Continue reading…]
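The Rowhammer mechanism described in the excerpt above can be pictured with a toy simulation. The Python sketch below is not an attack and touches no real hardware: the “memory” is a list of rows, and `ToyDRAM`, `hammer` and `LEAK_THRESHOLD` are hypothetical names, with a threshold invented for illustration (real vulnerability varies from chip to chip). It models two features of the technique: only rows adjacent to the hammered one are disturbed, and the accesses must be packed into a single refresh window, because DRAM’s periodic refresh restores each cell’s charge.

```python
from dataclasses import dataclass, field

# Hypothetical parameter: activations of a neighboring row, within one
# refresh window, after which a victim cell has leaked enough charge to flip.
LEAK_THRESHOLD = 50_000


@dataclass
class ToyDRAM:
    rows: list  # each row is a list of bits (0 or 1)
    disturbance: list = field(init=False)

    def __post_init__(self) -> None:
        # Per-row count of neighboring activations since the last refresh.
        self.disturbance = [0] * len(self.rows)

    def activate(self, r: int) -> int:
        """Read row r; each activation slightly disturbs the adjacent rows."""
        for victim in (r - 1, r + 1):
            if 0 <= victim < len(self.rows):
                self.disturbance[victim] += 1
                if self.disturbance[victim] == LEAK_THRESHOLD:
                    # Enough charge has leaked: flip one (arbitrarily chosen) bit.
                    self.rows[victim][0] ^= 1
        return self.rows[r][0]

    def refresh(self) -> None:
        """Refresh restores full charge, so accumulated disturbance resets."""
        self.disturbance = [0] * len(self.rows)


def hammer(dram: ToyDRAM, aggressor: int, activations: int) -> None:
    """Access one row many times within a single refresh window."""
    for _ in range(activations):
        dram.activate(aggressor)
    dram.refresh()


if __name__ == "__main__":
    dram = ToyDRAM(rows=[[1, 1, 1, 1] for _ in range(3)])
    hammer(dram, aggressor=1, activations=10_000)  # below the threshold
    print(dram.rows[0], dram.rows[2])              # [1, 1, 1, 1] [1, 1, 1, 1]
    hammer(dram, aggressor=1, activations=60_000)  # crosses the threshold
    print(dram.rows[0], dram.rows[2])              # [0, 1, 1, 1] [0, 1, 1, 1]
```

The first, shorter burst leaves every row intact; the second flips a bit in both neighbors of the hammered row while the hammered row itself is untouched. Splitting the same number of accesses across several refresh windows would never reach the threshold, which is why real attacks must hammer fast.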

Forget ideology, liberal democracy’s newest threats come from technology and bioscience

John Naughton writes: The BBC Reith Lectures in 1967 were given by Edmund Leach, a Cambridge social anthropologist. “Men have become like gods,” Leach began. “Isn’t it about time that we understood our divinity? Science offers us total mastery over our environment and over our destiny, yet instead of rejoicing we feel deeply afraid.”

That was nearly half a century ago, and yet Leach’s opening lines could easily have been written today. He was speaking before the internet had been built and long before the human genome had been decoded, and so his claim about men becoming “like gods” seems relatively modest compared with the capabilities that molecular biology and computing have subsequently bestowed upon us. Our science-based culture is the most powerful in history, and it is ceaselessly researching, exploring, developing and growing. But in recent times it seems to have also become plagued with existential angst as the implications of human ingenuity begin to be (dimly) glimpsed.

The title that Leach chose for his Reith Lecture – A Runaway World – captures our zeitgeist too. At any rate, we are also increasingly fretful about a world that seems to be running out of control, largely (but not solely) because of information technology and what the life sciences are making possible. But we seek consolation in the thought that “it was always thus”: people felt alarmed about steam in George Eliot’s time and got worked up about electricity, the telegraph and the telephone as they arrived on the scene. The reassuring implication is that we weathered those technological storms, and so we will weather this one too. Humankind will muddle through.

But in the last five years or so even that cautious, pragmatic optimism has begun to erode. There are several reasons for this loss of confidence. One is the sheer vertiginous pace of technological change. Another is that the new forces loose in our society – particularly information technology and the life sciences – are potentially more far-reaching in their implications than steam or electricity ever were. And, thirdly, we have begun to see startling advances in these fields that have forced us to recalibrate our expectations. [Continue reading…]