Financial Times reports: UK-based insurer Admiral has come up with a way to crunch through social media posts to work out who deserves a lower premium. People who seem cautious and deliberate in their choice of words are likely to pay a lot less than those with overconfident remarks. [Continue reading…]


Iraqis are world’s most generous to strangers

Reuters reports: Although torn by civil war, Iraq is the world’s most generous country towards strangers in need, according to a new global index of charitable giving.

Eighty-one percent of Iraqis reported helping someone they didn’t know in the previous month, in a global poll commissioned by the Charities Aid Foundation (CAF).

For the first time since CAF began the poll in 2010, more than half of people in 140 countries surveyed said they had helped strangers – with many of the most generous found in countries hit hard by disaster and war. [Continue reading…]

Science shows the richer you get, the less you pay attention to other people

Lila MacLellan writes: No one can pay attention to everything they encounter. We simply do not have enough time or mental capacity for it. Most of us, though, do make an effort to acknowledge our fellow humans. Wealth, it seems, might change that.

There’s a growing body of research showing how having money changes the way people see — or are oblivious to — others and their problems. The latest is a paper published in the journal Psychological Science in which psychologists at New York University show that wealthy people unconsciously pay less attention to passersby on the street.

In the paper, the researchers describe experiments they conducted to measure the effects of social class on what’s called the “motivational relevance” of other human beings. According to some schools of psychological thought, we’re motivated to pay attention to something when we assign more value to it, whether because it threatens us or offers the potential for some kind of reward. [Continue reading…]

Our slow, uncertain brains are still better than computers — here’s why

By Parashkev Nachev, UCL

Automated financial trading machines can make complex decisions in a thousandth of a second. A human being making a choice – however simple – can never be faster than about one-fifth of a second. Our reaction times are not only slow but also remarkably variable, ranging over hundreds of milliseconds.

Is this because our brains are poorly designed, prone to random uncertainty – or “noise” in the electronic jargon? Measured in the laboratory, even the neurons of a fly are both fast and precise in their responses to external events, down to a few milliseconds. The sloppiness of our reaction times looks less like an accident than a built-in feature. The brain deliberately procrastinates, even if we ask it to do otherwise.

Massively parallel wetware

Why should this be? Unlike computers, our brains are massively parallel in their organisation, concurrently running many millions of separate processes. They must do this because they are not designed to perform a specific set of actions but to select from a vast repertoire of alternatives that the fundamental unpredictability of our environment offers us. From an evolutionary perspective, it is best to trust nothing and no one, least of all oneself. So before each action the brain must flip through a vast Rolodex of possibilities. It is amazing it can do this at all, let alone in a fraction of a second.

But why the variability? There is hierarchically nothing higher than the brain, so decisions have to arise through peer-to-peer interactions between different groups of neurons. Since there can be only one winner at any one time – our movements would otherwise be chaotic – the mode of resolution is less negotiation than competition: a winner-takes-all race. To ensure the competition is fair, the race must run for a minimum length of time – hence the delay – and the time it takes will depend on the nature and quality of the field of competitors, hence the variability.

Fanciful though this may sound, the distributions of human reaction times, across different tasks, limbs, and people, have been repeatedly shown to fit the “race” model remarkably well. And one part of the brain – the medial frontal cortex – seems to track reaction time tightly, as an area crucial to procrastination ought to. Disrupting the medial frontal cortex should therefore disrupt the race, bringing it to an early close. Rather than slowing us down, disrupting the brain should here speed us up, accelerating behaviour but at the cost of less considered actions.
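To make the race idea concrete, here is a toy simulation. It is only a sketch under stated assumptions, not the model used in the research described above: each competitor rises towards a threshold at a rate drawn afresh on every trial, the first to arrive wins, and the reaction time is the winner's finishing time plus a fixed delay. The function names and every number (`n_competitors`, `mean_rate`, `sd_rate`, `base_delay`) are hypothetical, chosen for illustration. Lowering `threshold` is used as a crude stand-in for bringing the race to an early close.

```python
import random
import statistics


def race_trial(rng, n_competitors=4, threshold=1.0,
               mean_rate=5.0, sd_rate=1.0, base_delay=0.05):
    """Run one winner-takes-all race; return (winner_index, reaction_time_s)."""
    finish_times = []
    for _ in range(n_competitors):
        # Each competitor's rate of rise varies randomly from trial to trial.
        # The clamp (an assumption) guarantees every competitor finishes.
        rate = max(rng.gauss(mean_rate, sd_rate), 0.5)
        finish_times.append(threshold / rate)
    # The first competitor to reach the threshold wins the race.
    winner = min(range(n_competitors), key=finish_times.__getitem__)
    return winner, base_delay + finish_times[winner]


def simulate(n_trials=10_000, seed=0, **kwargs):
    """Return the reaction times (in seconds) of n_trials independent races."""
    rng = random.Random(seed)
    return [race_trial(rng, **kwargs)[1] for _ in range(n_trials)]


if __name__ == "__main__":
    for label, threshold in (("normal race", 1.0), ("early close", 0.5)):
        times = simulate(threshold=threshold)
        print(f"{label}: mean {statistics.mean(times) * 1000:.0f} ms, "
              f"spread {statistics.stdev(times) * 1000:.0f} ms")
```

Even in this crude version the two features described above appear: no trial is faster than the built-in delay, and the reaction time varies from trial to trial with the quality of the field. Halving the threshold makes every trial faster, which is the sketch's analogue of quicker but less considered action.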

Humans aren’t the only primates that can make sharp stone tools

The Guardian reports: Monkeys have been observed producing sharp stone flakes that closely resemble the earliest known tools made by our ancient relatives, proving that this ability is not uniquely human.

Previously, modifying stones to create razor-edged fragments was thought to be an activity confined to hominins, the family including early humans and their more primitive cousins. The latest observations re-write this view, showing that monkeys unintentionally produce almost identical artefacts simply by smashing stones together.

The findings put archaeologists on alert that they can no longer assume that stone flakes they discover are linked to the deliberate crafting of tools by early humans as their brains became more sophisticated.

Tomos Proffitt, an archaeologist at the University of Oxford and the study’s lead author, said: “At a very fundamental level – if you’re looking at a very simple flake – if you had a capuchin flake and a human flake they would be the same. It raises really important questions about what level of cognitive complexity is required to produce a sophisticated cutting tool.”

Unlike those made by early humans, the flakes produced by the capuchins were the unintentional byproduct of hammering stones – an activity that the monkeys pursued decisively, but whose purpose was not clear. Originally, scientists thought the behaviour was a flamboyant display of aggression in response to an intruder, but after more extensive observations the monkeys appeared to be seeking out the quartz dust produced by smashing the rocks, possibly because it has a nutritional benefit. [Continue reading…]

The vulnerability of monolingual Americans in an English-speaking world

Ivan Krastev writes: In our increasingly Anglophone world, Americans have become nakedly transparent to English speakers everywhere, yet the world remains bafflingly and often frighteningly opaque to monolingual Americans. While the world devours America’s movies and follows its politics closely, Americans know precious little about how non-Americans think and live. Americans have never heard of other countries’ movie stars and have only the vaguest notions of what their political conflicts are about.

This gross epistemic asymmetry is a real weakness. When WikiLeaks revealed the secret cables of the American State Department or leaked the emails of the Clinton campaign, it became a global news sensation and a major embarrassment for American diplomacy. Leaking Chinese diplomatic cables or Russian officials’ emails could never become a worldwide human-interest story, simply because only a relative handful of non-Chinese or non-Russians could read them, let alone make sense of them. [Continue reading…]

Although I’m pessimistic about the prospects of the meek inheriting the earth, the bi-lingual are in a very promising position. And Anglo-Americans should never forget that this is after all a country with a Spanish name. As for where I stand personally, I’m with the bi-lingual camp in spirit even if my own claim to be bi-lingual is a bit tenuous — an English-speaker who understands American-English but speaks British-English; does that count?

Where did the first farmers live? Looking for answers in DNA

Carl Zimmer writes: Beneath a rocky slope in central Jordan lie the remains of a 10,000-year-old village called Ain Ghazal, whose inhabitants lived in stone houses with timber roof beams, the walls and floors gleaming with white plaster.

Hundreds of people living there worshiped in circular shrines and made haunting, wide-eyed sculptures that stood three feet high. They buried their cherished dead under the floors of their houses, decapitating the bodies in order to decorate the skulls.

But as fascinating as this culture was, something else about Ain Ghazal intrigues archaeologists more: It was one of the first farming villages to have emerged after the dawn of agriculture.

Around the settlement, Ain Ghazal farmers raised barley, wheat, chickpeas and lentils. Other villagers would leave for months at a time to herd sheep and goats in the surrounding hills.

Sites like Ain Ghazal provide a glimpse of one of the most important transitions in human history: the moment that people domesticated plants and animals, settled down, and began to produce the kind of society in which most of us live today.

But for all that sites like Ain Ghazal have taught archaeologists, they are still grappling with enormous questions. Who exactly were the first farmers? How did agriculture, a cornerstone of civilization itself, spread to other parts of the world?

Some answers are now emerging from a surprising source: DNA extracted from skeletons at Ain Ghazal and other early settlements in the Near East. These findings have already challenged long-held ideas about how agriculture and domestication arose. [Continue reading…]

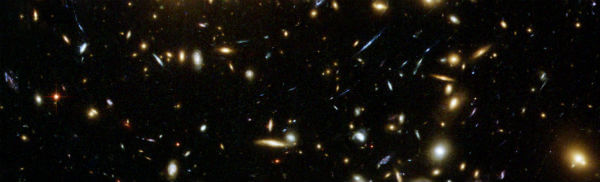

There may be two trillion other galaxies

Brian Gallagher writes: In 1939, the year Edwin Hubble won the Benjamin Franklin award for his studies of “extra-galactic nebulae,” he paid a visit to an ailing friend. Depressed and interned at Las Encinas Hospital, a mental health facility, the friend, an actor and playwright named John Emerson, asked Hubble what — spiritually, cosmically — he believed in. In Edwin Hubble: Mariner of the Nebulae, Gale E. Christianson writes that Hubble, a Christian-turned-agnostic, “pulled no punches” in his reply. “The whole thing is so much bigger than I am,” he told Emerson, “and I can’t understand it, so I just trust myself to it, and forget about it.”

Even though he was moved by a sense of the universe’s immensity, it’s arresting to recall how small Hubble thought the cosmos was at the time. “The picture suggested by the reconnaissance,” he wrote in his 1937 book, The Observational Approach to Cosmology, “is a sphere, centred on the observer, about 1,000 million light-years in diameter, throughout which are scattered about 100 million nebulae,” or galaxies. “A suitable model,” he went on, “would be furnished by tennis balls, 50 feet apart, scattered through a sphere 5 miles in diameter.” From the instrument later named after him, the Hubble Space Telescope, launched in 1990, we learned from a series of pictures taken, starting five years later, just how unsuitable that model was.
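Hubble's tennis-ball picture can be checked with a few lines of arithmetic. The sketch below assumes the 100 million "balls" are spread evenly through the five-mile sphere and takes the typical spacing to be the side of the cube of space each ball would get; that definition of spacing, and the function name, are assumptions made here, not Hubble's.

```python
import math

FEET_PER_MILE = 5280


def mean_spacing_feet(sphere_diameter_miles, n_objects):
    """Typical spacing if n_objects are spread evenly through a sphere."""
    diameter_ft = sphere_diameter_miles * FEET_PER_MILE
    # Volume of a sphere expressed through its diameter: (pi / 6) * d^3.
    volume_ft3 = math.pi / 6 * diameter_ft ** 3
    volume_per_object = volume_ft3 / n_objects
    # Side of a cube holding one object's share of the volume.
    return volume_per_object ** (1 / 3)


if __name__ == "__main__":
    spacing = mean_spacing_feet(5, 100_000_000)
    print(f"typical spacing: about {spacing:.0f} feet")
```

Under these assumptions the spacing comes out at roughly 46 feet, so the quoted "50 feet apart" is a fair rounding of his own numbers.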

The first is called the Hubble Deep Field, arguably “the most important image ever taken” according to this YouTube video. (I recommend watching it.) The Hubble gazed, for ten days, at an apparently empty spot in the sky, one about the size of a pinhead held up at arm’s length — a fragment one 24-millionth of the whole sky. The resulting picture had 3,000 objects, almost all of them galaxies in various stages of development, and many of them as far away as 12 billion light-years. Robert Williams, the former director of the Space Telescope Science Institute, wrote in the New York Times, “The image is really a core sample of the universe.” Next came the Ultra Deep Field, in 2003 (after a three-month exposure with a new camera, the Hubble image came back with 10,000 galaxies), then the eXtreme Deep Field, in 2012, a refined version of the Ultra that reveals galaxies that formed just 450 million years after the Big Bang. [Continue reading…]
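The Deep Field numbers invite a back-of-the-envelope extrapolation. The sketch below assumes, naively, that the patch is representative of the whole sky and that all 3,000 objects are galaxies (the excerpt says almost all); the function name is hypothetical.

```python
def naive_sky_total(objects_in_patch, patches_in_sky):
    """Scale a count from one patch up to the whole sky, assuming uniformity."""
    return objects_in_patch * patches_in_sky


if __name__ == "__main__":
    # 3,000 objects in a patch covering one 24-millionth of the sky.
    total = naive_sky_total(3_000, 24_000_000)
    print(f"{total:,} galaxies")  # 72,000,000,000
```

That gives about 72 billion, well below the two trillion in the headline; the excerpt does not say how the larger figure was reached.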

Guns, empires and Indians

David J Silverman writes: It has become commonplace to attribute the European conquest of the Americas to Jared Diamond’s triumvirate of guns, germs and steel. Germs refer to plague, measles, flu, whooping cough and, especially, the smallpox that whipsawed through indigenous populations, sometimes with a mortality rate of 90 per cent. The epidemics left survivors ill-equipped to fend off predatory encroachments, either from indigenous or from European peoples, who seized captives, land and plunder in the wake of these diseases.

Guns and steel, of course, represent Europeans’ technological prowess. Metal swords, pikes, armour and firearms, along with ships, livestock and even wheeled carts, gave European colonists significant military advantages over Native American people wielding bows and arrows, clubs, hatchets and spears. The attractiveness of such goods also meant that Indians desired trade with Europeans, despite the danger the newcomers represented. The lure of trade enabled Europeans to secure beachheads on the East Coast of North America, and make inroads to the interior of the continent. Intertribal competition for European trade also enabled colonists to employ ‘divide and conquer’ strategies against much larger indigenous populations.

Diamond’s explanation has grown immensely popular and influential. It appears to be a simple and sweeping teleology providing order and meaning to the complexity of the European conquest of the Western hemisphere. The guns, germs and steel perspective has helped further understanding of some of the major forces behind globalisation. But it also involves a level of abstraction that risks obscuring the history of individuals and groups whose experiences cannot be so aptly and neatly summarised. [Continue reading…]

The more you know about a topic the more likely you are to have false memories about it

By Ciara Greene, University College Dublin

Human memory does not operate like a video tape that can be rewound and rewatched, with every viewing revealing the same events in the same order. In fact, memories are reconstructed every time we recall them. Aspects of the memory can be altered, added or deleted altogether with each new recollection. This can lead to the phenomenon of false memory, where people have clear memories of an event that they never experienced.

False memory is surprisingly common, but a number of factors can increase its frequency. Recent research in my lab shows that being very interested in a topic can make you twice as likely to experience a false memory about that topic.

Previous research has indicated that experts in a few clearly defined fields, such as investments and American football, might be more likely to experience false memory in relation to their areas of expertise. Opinion as to the cause of this effect is divided. Some researchers have suggested that greater knowledge makes a person more likely to incorrectly recognise new information that is similar to previously experienced information. Another interpretation suggests that experts feel that they should know everything about their topic of expertise. According to this account, experts’ sense of accountability for their judgements causes them to “fill in the gaps” in their knowledge with plausible, but false, information.

To further investigate this, we asked 489 participants to rank seven topics from most to least interesting. The topics we used were football, politics, business, technology, film, science and pop music. The participants were then asked if they remembered the events described in four news items about the topic they selected as the most interesting, and four items about the topic selected as least interesting. In each case, three of the events depicted had really happened and one was fictional.

The results showed that being interested in a topic increased the frequency of accurate memories relating to that topic. Critically, it also increased the number of false memories – 25% of people experienced a false memory in relation to an interesting topic, compared with 10% in relation to a less interesting topic. Importantly, our participants were not asked to identify themselves as experts, and did not get to choose which topics they would answer questions about. This means that the increase in false memories is unlikely to be due to a sense of accountability for judgements about a specialist topic.

Seagrass is a marine powerhouse, so why isn’t it on the world’s conservation agenda?

By Richard K.F. Unsworth, Swansea University; Jessie Jarvis, University of North Carolina Wilmington; Len McKenzie, James Cook University, and Mike van Keulen, Murdoch University

Seagrass has been around since dinosaurs roamed the earth. It is responsible for keeping the world’s coastlines clean and healthy, and it supports many different species of animal, including humans. And yet it is often overlooked, regarded as merely an innocuous feature of the ocean.

But the fact is that this plant is vital – and it is for that reason that the World Seagrass Association has issued a consensus statement, signed by 115 scientists from 25 countries, stating that these important ecosystems can no longer be ignored on the conservation agenda. Seagrass is part of a marginalised ecosystem that must be increasingly managed, protected and monitored – and needs urgent attention now.

Seagrass meadows are of fundamental importance to human life. They exist on the coastal fringes of almost every continent on earth, where seagrass and its associated biodiversity support fisheries’ productivity. These flowering plants are the powerhouses of the sea, creating life in otherwise unproductive muddy environments. The meadows they form stabilise sediments, filter vast quantities of nutrients and provide one of the planet’s most efficient oceanic stores of carbon.

But the habitat seagrasses create is suffering due to the impact of humans: poor water quality, coastal development, boating and destructive fishing are all resulting in seagrass loss and degradation. This leads in turn to the loss of most of the fish and invertebrate populations that the meadows support. The green turtle, dugong and species of seahorse, for example, all rely on seagrass for food and shelter, and its loss endangers their viability. The plants are important fish nurseries and key fishing grounds. Losing them puts the livelihoods of hundreds of millions of people at risk too, and exposes them to increasing levels of poverty.

How aphasic patients understood the presidential debate

Susie Neilson writes: In The President’s Speech, a 1985 essay by the late neurologist and writer Oliver Sacks, he observes a group of people with aphasia, a language disorder, as they laugh uproariously at the television. The cause of their amusement is an unnamed actor-turned United States president, presumably Ronald Reagan, addressing his audience: “There he was, the old Charmer, the Actor, with his practised rhetoric, his histrionisms, his emotional appeal…The President was, as always, moving—but he was moving them, apparently, mainly to laughter. What could they be thinking? Were they failing to understand him? Or did they, perhaps, understand him all too well?”

Aphasic patients have a heightened ability to interpret body language, tonal quality, and other non-verbal aspects of communication due to a disruption of their speech, writing, reading, or listening abilities. Each aphasic person may have disruptions in any or all of these areas. Usually, the damage comes from a stroke or other head trauma — many people become aphasic in the wake of combat, for example, or after car accidents. “The key,” says Darlene Williamson, a speech pathologist specializing in aphasia and president of the National Aphasia Association, “is intelligence remains intact.”

In this sense, Williamson says, having aphasia is akin to visiting a foreign country, where everyone is communicating in a language you are conversational in at best. “The more impaired your language is,” she says, “the harder you’re working to be sure that you’re comprehending what’s going on.” How do we do this? By paying more careful attention to the cues we can understand, Williamson says. [Continue reading…]

Can great apes read your mind?

By Christopher Krupenye, Max Planck Institute

One of the things that defines humans most is our ability to read others’ minds – that is, to make inferences about what others are thinking. To build or maintain relationships, we offer gifts and services – not arbitrarily, but with the recipient’s desires in mind. When we communicate, we do our best to take into account what our partners already know and to provide information we know will be new and comprehensible. And sometimes we deceive others by making them believe something that is not true, or we help them by correcting such false beliefs.

All these very human behaviors rely on an ability psychologists call theory of mind: We are able to think about others’ thoughts and emotions. We form ideas about what beliefs and feelings are held in the minds of others – and recognize that they can be different from our own. Theory of mind is at the heart of everything social that makes us human. Without it, we’d have a much harder time interpreting – and probably predicting – others’ behavior.

For a long time, many researchers have believed that a major reason human beings alone exhibit unique forms of communication, cooperation and culture is that we’re the only animals to have a complete theory of mind. But is this ability really unique to humans?

In a new study published in Science, my colleagues and I tried to answer this question using a novel approach. Previous work has generally suggested that people think about others’ perspectives in very different ways than other animals do. Our new findings suggest, however, that great apes may actually be a bit more similar to us than we previously thought.

Hints of tool use, culture seen in bumble bees

Science magazine reports: For years, cognitive scientist Lars Chittka felt a bit eclipsed by his colleagues at Queen Mary University of London. Their studies of apes, crows, and parrots were constantly revealing how smart these animals were. He worked on bees, and at the time, almost everyone assumed that the insects acted on instinct, not intelligence. “So there was a challenge for me: Could we get our small-brained bees to solve tasks that would impress a bird cognition researcher?” he recalls. Now, it seems he has succeeded at last.

Chittka’s team has shown that bumble bees can not only learn to pull a string to retrieve a reward, but they can also learn this trick from other bees, even though they have no experience with such a task in nature. The study “successfully challenges the notion that ‘big brains’ are necessary” for new skills to spread, says Christian Rutz, an evolutionary ecologist who studies bird cognition at the University of St. Andrews in the United Kingdom.

Many researchers have used string pulling to assess the smarts of animals, particularly birds and apes. So Chittka and his colleagues set up a low clear plastic table barely tall enough to lay three flat artificial blue flowers underneath. Each flower contained a well of sugar water in the center and had a string attached that extended beyond the table’s boundaries. The only way the bumble bee could get the sugar water was to pull the flower out from under the table by tugging on the string. [Continue reading…]

The world’s most sustainable technology

Stephen E. Nash writes: To my mind, a well-made Acheulean hand ax is one of the most beautiful and remarkable archaeological objects ever found, anywhere on the planet. I love its clean, symmetrical lines. Its strength and heft impress me, and so does its persistence.

Acheulean hand ax is the term archaeologists now use to describe the distinctive stone-tool type first discovered by John Frere at Hoxne, in Suffolk, Great Britain, in the late 1700s. Jacques Boucher de Perthes, a celebrated archaeologist, found similar objects in France during excavations conducted in the 1830s and 1840s. The name Acheulean comes from the site of Saint-Acheul, near the town of Amiens in northern France, which de Perthes excavated in 1859.

The term hand ax is arguably a misnomer. While the stone tool could conceivably have been used to chop — as with a modern ax — there is little evidence the implement was attached to a handle. In the absence of a handle, the user would have seriously damaged his or her hands while holding onto the ax’s sharp edges and striking another hard substance. There is evidence, however — in the form of telltale microscopic damage to the hand-ax edges and surfaces — that these objects were used for slicing, scraping, and some woodworking activities. Hand axes also served as sources of raw material (in strict archaeological terms, they were cores) from which new, smaller cutting tools (flakes) were struck. So they likely served a range of purposes. [Continue reading…]

Study suggests human proclivity for violence gets modulated but is not necessarily diminished by culture

A new study (by José Maria Gómez et al) challenges Steven Pinker’s rosy picture of the state of the world. Science magazine reports: Though group-living primates are relatively violent, the rates vary. Nearly 4.5% of chimpanzee deaths are caused by another chimp, for example, whereas bonobos are responsible for only 0.68% of their compatriots’ deaths. Based on the rates of lethal violence seen in our close relatives, Gómez and his team predicted that 2% of human deaths would be caused by another human.

To see whether that was true, the researchers dove into the scientific literature documenting lethal violence among humans, from prehistory to today. They combined data from archaeological excavations, historical records, modern national statistics, and ethnographies to tally up the number of humans killed by other humans in different time periods and societies. From 50,000 years ago to 10,000 years ago, when humans lived in small groups of hunter-gatherers, the rate of killing was “statistically indistinguishable” from the predicted rate of 2%, based on archaeological evidence, Gómez and his colleagues report today in Nature.

Later, as human groups consolidated into chiefdoms and states, rates of lethal violence shot up — as high as 12% in medieval Eurasia, for example. But in the contemporary era, when industrialized states exert the rule of law, violence is lower than our evolutionary heritage would predict, hovering around 1.3% when combining statistics from across the world. That means evolution “is not a straitjacket,” Gómez says. Culture modulates our bloodthirsty tendencies.

The study is “innovative and meticulously conducted,” says Douglas Fry, an anthropologist at the University of Alabama, Birmingham. The 2% figure is significantly lower than Harvard University psychologist Steven Pinker’s much publicized estimate that 15% of deaths are due to lethal violence among hunter-gatherers. The lower figure resonates with Fry’s extensive studies of nomadic hunter-gatherers, whom he has observed to be less violent than Pinker’s work suggests. “Along with archaeology and nomadic forager research, this [study] shoots holes in the view that the human past and human nature are shockingly violent,” Fry says. [Continue reading…]

Freedom for some requires the enslavement of others

Maya Jasanoff writes: One hundred and fifty years after the Thirteenth Amendment abolished slavery in the United States, the nation’s first black president paid tribute to “a century and a half of freedom—not simply for former slaves, but for all of us.” It sounds innocuous enough till you start listening to the very different kinds of political rhetoric around us. All of us are not free, insists the Black Lives Matter movement, when “the afterlife of slavery” endures in police brutality and mass incarceration. All of us are not free, says the Occupy movement, when student loans impose “debt slavery” on the middle and working classes. All of us are not free, protests the Tea Party, when “slavery” lurks within big government. Social Security? “A form of modern, twenty-first-century slavery,” says Florida congressman Allen West. The national debt? “It’s going to be like slavery when that note is due,” says Sarah Palin. Obamacare? “Worse than slavery,” says Ben Carson. Black, white, left, right—all of us, it seems, can be enslaved now.

Americans learn about slavery as an “original sin” that tempted the better angels of our nation’s egalitarian nature. But “the thing about American slavery,” writes Greg Grandin in his 2014 book The Empire of Necessity, about an uprising on a slave ship off the coast of Chile and the successful effort to end it, is that “it never was just about slavery.” It was about an idea of freedom that depended on owning and protecting personal property. As more and more settlers arrived in the English colonies, the property they owned increasingly took the human form of African slaves. Edmund Morgan captured the paradox in the title of his classic American Slavery, American Freedom: “Freedom for some required the enslavement of others.” When the patriots protested British taxation as a form of “slavery,” they weren’t being hypocrites. They were defending what they believed to be the essence of freedom: the right to preserve their property.

The Empire of Necessity explores “the fullness of the paradox of freedom and slavery” in the America of the early 1800s. Yet to understand the chokehold of slavery on American ideas of freedom, it helps to go back to the beginning. At the time of the Revolution, slavery had been a fixture of the thirteen colonies for as long as the US today has been without it. “Slavery was in England’s American colonies, even its New England colonies, from the very beginning,” explains Princeton historian Wendy Warren in her deeply thoughtful, elegantly written New England Bound, an exploration of captivity in seventeenth-century New England. The Puritan ideal of a “city on a hill,” long held up as a model of America at its communitarian best, actually rested on the backs of “numerous enslaved and colonized people.” [Continue reading…]

Video: Aurora Borealis over Iceland

Time reports: Streetlights across much of Iceland’s capital were switched off Wednesday night so its citizens could make the most of the otherworldly glow of the aurora borealis dancing in the sky above them — without the usual interference from light pollution.

Lights in central Reykjavik and several outlying districts went out for about an hour at 10 p.m. local time, and residents were encouraged to turn off lights at home, the Iceland Monitor reported.

While those in Iceland can expect to see the aurora borealis, also known as the northern lights, at any time from September to May, high solar-particle activity combined with clear skies on Wednesday created unusually perfect conditions. [Continue reading…]

@DSunsets @TheBestSunsets @weather_EU @WeerEnWind @weerwijchen @wx @WX_RT @gjfotos @WeatherNation the ongoing aurora feast by HP Helgason pic.twitter.com/nrQ7YIBnzp

— John Steuten (@SteutenJohn) September 28, 2016