Category Archives: Attention to the Unseen

The problem of translation

Gideon Lewis-Kraus writes: One Enlightenment aspiration that the science-fiction industry has long taken for granted, as a necessary intergalactic conceit, is the universal translator. In a 1967 episode of “Star Trek,” Mr. Spock assembles such a device from spare parts lying around the ship. An elongated chrome cylinder with blinking red-and-green indicator lights, it resembles a retracted light saber; Captain Kirk explains how it works with an off-the-cuff disquisition on the principles of Chomsky’s “universal grammar,” and they walk outside to the desert-island planet of Gamma Canaris N, where they’re being held hostage by an alien. The alien, whom they call The Companion, materializes as a fraction of sparkling cloud. It looks like an orange Christmas tree made of vaporized mortadella. Kirk grips the translator and addresses their kidnapper in a slow, patronizing, put-down-the-gun tone. The all-powerful Companion is astonished.

“My thoughts,” she says with some confusion, “you can hear them.”

The exchange emphasizes the utopian ambition that has long motivated universal translation. The Companion might be an ion fog with coruscating globules of viscera, a cluster of chunky meat-parts suspended in aspic, but once Kirk has established communication, the first thing he does is teach her to understand love. It is a dream that harks back to Genesis, of a common tongue that perfectly maps thought to world. In Scripture, this allowed for a humanity so well coordinated, so alike in its understanding, that all the world’s subcontractors could agree on a time to build a tower to the heavens. Since Babel, though, even the smallest construction projects are plagued by terrible delays. [Continue reading…]

The dysevolution of humanity

Jeff Wheelwright writes: I sat in my padded desk chair, hunched over, alternately entering notes on my computer and reading a book called The Story of the Human Body. It was the sort of book guaranteed to make me increasingly, uncomfortably aware of my own body. I squirmed to relieve an ache in my lower back. When I glanced out the window, the garden looked fuzzy. Where were my glasses? My toes felt hot and itchy: My athlete’s foot was flaring up again.

I returned to the book. “This chapter focuses on just three behaviors … that you are probably doing right now: wearing shoes, reading, and sitting.” OK, I was. What could be more normal?

According to the author, a human evolutionary biologist at Harvard named Daniel Lieberman, shoes, books and padded chairs are not normal at all. My body had good reason to complain because it wasn’t designed for these accessories. Too much sitting caused back pain. Too much focusing on books and computer screens at a young age fostered myopia. Enclosed, cushioned shoes could lead to foot problems, including bunions, fungus between the toes and plantar fasciitis, an inflammation of the tissue below weakened arches.

Those are small potatoes compared with obesity, Type 2 diabetes, osteoporosis, heart disease and many cancers also on the rise in the developed and developing parts of the world. These serious disorders share several characteristics: They’re chronic, noninfectious, aggravated by aging and strongly influenced by affluence and culture. Modern medicine has come up with treatments for them, but not solutions; the deaths and disabilities continue to climb.

An evolutionary perspective is critical to understanding the body’s pitfalls in a time of plenty, Lieberman suggests. [Continue reading…]

Why do Americans waste so much food?

Extreme athletes gain control through fear – and sometimes pay the price

By Tim Woodman, Bangor University; Lew Hardy, Bangor University, and Matthew Barlow, Bangor University

The death of famed “daredevil” climber and base jumper Dean Potter has once again raised the idea that all high-risk sportspeople are hedonistic thrill seekers. Our research into extreme athletes shows this view is simplistic and wrong.

It’s about attitudes to risk. In his famous Moon speech in 1962, John F Kennedy said:

“Many years ago the great British explorer George Mallory, who was to die on Mount Everest, was asked [by a New York Times journalist] why did he want to climb it. He said, ‘Because it is there.’ Well, space is there, and we’re going to climb it, and the moon and the planets are there, and new hopes for knowledge and peace are there …”

Humans have evolved through taking risks. In fact, most human actions can be conceptualised as containing an element of risk: as we take our first step, we risk falling down; as we try a new food, we risk being disgusted; as we ride a bicycle, we risk falling over; as we go on a date, we risk being rejected; and as we travel to the moon, we risk not coming back.

Human endeavour and risk are intertwined. So it is not surprising that despite the increasingly risk-averse society that we live in, many people crave danger and risk – a life less sanitised.

A handful of Bronze-Age men could have fathered two thirds of Europeans

By Daniel Zadik, University of Leicester

For such a large and culturally diverse place, Europe has surprisingly little genetic variety. Learning how and when the modern gene-pool came together has been a long journey. But thanks to new technological advances a picture is slowly coming together of repeated colonisation by peoples from the east with more efficient lifestyles.

In a new study, we have added a piece to the puzzle: the Y chromosomes of the majority of European men can be traced back to just three individuals living between 3,500 and 7,300 years ago. How their lineages came to dominate Europe makes for interesting speculation. One possibility could be that their DNA rode across Europe on a wave of new culture brought by nomadic people from the Steppe known as the Yamnaya.

Stone Age Europe

The first-known people to enter Europe were the Neanderthals – and though they have left some genetic legacy, it is later waves who account for the majority of modern European ancestry. The first “anatomically modern humans” arrived in the continent around 40,000 years ago. These were the Palaeolithic hunter-gatherers sometimes called the Cro-Magnons. They populated Europe quite sparsely and lived a lifestyle not very different from that of the Neanderthals they replaced.

Then something revolutionary happened in the Middle East – farming, which allowed for enormous population growth. We know that from around 8,000 years ago a wave of farming and population growth exploded into both Europe and South Asia. But what has been much less clear is the mechanism of this spread. How much was due to the children of the farmers moving into new territories and how much was due to the neighbouring hunter-gatherers adopting this new way of life?

The oldest stone tools yet discovered are unearthed in Kenya

Smithsonian magazine: Approximately 3.3 million years ago someone began chipping away at a rock by the side of a river. Eventually, this chipping formed the rock into a tool used, perhaps, to prepare meat or crack nuts. And this technological feat occurred before humans even showed up on the evolutionary scene.

That’s the conclusion of an analysis published today in Nature of the oldest stone tools yet discovered. Unearthed in a dried-up riverbed in Kenya, the shards of scarred rock, including what appear to be early hammers and cutting instruments, predate the previous record holder by around 700,000 years. Though it’s unclear who made the tools, the find is the latest and most convincing in a string of evidence that toolmaking began before any members of the Homo genus walked the Earth.

“This discovery challenges the idea that the main characters that make us human — making stone tools, eating more meat, maybe using language — all evolved at once in a punctuated way, near the origins of the genus Homo,” says Jason Lewis, a paleoanthropologist at Rutgers University and co-author of the study. [Continue reading…]

Americans should start paying attention to their quality of death

Lauren Alix Brown writes: At the end, they both required antipsychotics. Each had become unrecognizable to their families.

On the day that Sandy Bem, a Cornell psychology professor, 65, was diagnosed with Alzheimer’s, she decided that she would take her own life before the disease obliterated her entirely. As Robin Marantz Henig writes in the New York Times Magazine, Bem said, “I want to live only for as long as I continue to be myself.”

When she was 34, Nicole Teague was diagnosed with metastatic ovarian cancer. Her husband Matthew writes about the ordeal in Esquire: “We don’t tell each other the truth about dying, as a people. Not real dying. Real dying, regular and mundane dying, is so hard and so ugly that it becomes the worst thing of all: It’s grotesque. It’s undignified. No one ever told me the truth about it, not once.”

Matthew tells the truth, and it is horrifying. Over the course of two years, Nicole’s body becomes a rejection of the living. Extensive wounds on her abdomen from surgery expel feces and fistulas filled with food. Matthew spends his days tending to her needs, packing her wounds with ribbon, administering morphine and eventually Dilaudid; at night he goes into a closet, wraps a blanket around his head, stuffs it into a pile of dirty laundry, and screams.

These two stories bring into sharp focus what it looks like when an individual and her family shepherd death, instead of a team of doctors and a hospital. It’s a conversation that is being had more frequently in the US as the baby boomer population ages and more Americans face end-of-life choices. As a nation, we are learning that, in addition to our quality of life, we should pay attention to the quality of our death. [Continue reading…]

Why the singularity is greatly exaggerated

In 1968, Marvin Minsky said, “Within a generation we will have intelligent computers like HAL in the film, 2001.” What made him and other early AI proponents think machines would think like humans?

Even before Moore’s law there was the idea that computers are going to get faster and their clumsy behavior is going to get a thousand times better. It’s what Ray Kurzweil now claims. He says, “OK, we’re moving up this curve in terms of the number of neurons, number of processing units, so by this projection we’re going to be at super-human levels of intelligence.” But that’s deceptive. It’s a fallacy. Just adding more speed or neurons or processing units doesn’t mean you end up with a smarter or more capable system. What you need are new algorithms, new ways of understanding a problem. In the area of creativity, it’s not at all clear that a faster computer is going to get you there. You’re just going to come up with more bad, bland, boring things. That ability to distinguish, to filter out what’s interesting, that’s still elusive.

Today’s computers, though, can generate an awful lot of connections in split seconds.

But generating is fairly easy and testing pretty hard. In Robert Altman’s movie, The Player, they try to combine two movies to make a better one. You can imagine a computer that just takes all movie titles and tries every combination of pairs, like Reservoir Dogs meets Casablanca. I could write that program right now on my laptop and just let it run. It would instantly generate all possible combinations of movies and there will be some good ones. But recognizing them, that’s the hard part.

That’s the part you need humans for.

Right, the Tim Robbins movie exec character says, “I listen to stories and decide if they’ll make good movies or not.” The great majority of combinations won’t work, but every once in a while there’s one that is both new and interesting. In early AI it seemed like the testing was going to be easy. But we haven’t been able to figure out the filtering.

Can’t you write a creativity algorithm?

If you want to do variations on a theme, like Thomas Kinkade, sure. Take our movie machine. Let’s say there have been 10,000 movies — that’s 10,000 squared, or 100 million combinations of pairs of movies. We can build a classifier that would look at lots of pairs of successful movies and do some kind of inference on it so that it could learn what would be successful again. But it would be looking for patterns that are already existent. It wouldn’t be able to find that new thing that was totally out of left field. That’s what I think of as creativity — somebody comes up with something really new and clever. [Continue reading…]
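The "movie machine" described above is simple enough to sketch, which is rather the point: generating is cheap, judging is not. The Python below is a minimal illustration, not anything from the interview. The function names and the three-title sample list are hypothetical. It also makes the arithmetic explicit: 10,000 squared counts ordered pairs, including a film paired with itself, while counting each pair of two different films only once gives roughly half that figure.

```python
from itertools import combinations


def ordered_pair_count(n: int) -> int:
    """Ordered pairs (A meets B), self-pairs included: n squared."""
    return n * n


def unordered_pair_count(n: int) -> int:
    """Pairs of two different titles, each pair counted once: n choose 2."""
    return n * (n - 1) // 2


def mashups(titles):
    """Yield an 'A meets B' pitch for every pair of different titles."""
    for first, second in combinations(titles, 2):
        yield f"{first} meets {second}"


# Hypothetical three-title catalogue, for illustration only.
sample = ["Reservoir Dogs", "Casablanca", "The Player"]
pitches = list(mashups(sample))

print(ordered_pair_count(10_000))    # 10,000 squared
print(unordered_pair_count(10_000))  # distinct pairs, order ignored
for pitch in pitches:
    print(pitch)
```

Nothing in this sketch ranks the pitches. Deciding which of them is "both new and interesting" is the filtering step the interview describes as unsolved.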

What rats in a maze can teach us about our sense of direction

By Francis Carpenter, UCL and Caswell Barry, UCL

London’s taxi drivers have to pass an exam in which they are asked to name the shortest route between any two places within six miles of Charing Cross – an area with more than 60,000 roads. We know from brain scans that learning “the knowledge” – as the drivers call it – increases the size of their hippocampi, the part of the brain crucial to spatial memory.

Now, new research suggests that bigger hippocampi may not be the only neurological benefit of driving a black cab. While the average person likely has many separate mental maps for different areas of London, the hours cabbies spend navigating may result in the joining of these maps into a single, global map.

The complexity of science

Leonard Mlodinow writes: The other week I was working in my garage office when my 14-year-old daughter, Olivia, came in to tell me about Charles Darwin. Did I know that he discovered the theory of evolution after studying finches on the Galápagos Islands? I was steeped in what felt like the 37th draft of my new book, which is on the development of scientific ideas, and she was proud to contribute this tidbit of history that she had just learned in class.

Sadly, like many stories of scientific discovery, that commonly recounted tale, repeated in her biology textbook, is not true.

The popular history of science is full of such falsehoods. In the case of evolution, Darwin was a much better geologist than ornithologist, at least in his early years. And while he did notice differences among the birds (and tortoises) on the different islands, he didn’t think them important enough to make a careful analysis. His ideas on evolution did not come from the mythical Galápagos epiphany, but evolved through many years of hard work, long after he had returned from the voyage. (To get an idea of the effort involved in developing his theory, consider this: One byproduct of his research was a 684-page monograph on barnacles.)

The myth of the finches obscures the qualities that were really responsible for Darwin’s success: the grit to formulate his theory and gather evidence for it; the creativity to seek signs of evolution in existing animals, rather than, as others did, in the fossil record; and the open-mindedness to drop his belief in creationism when the evidence against it piled up.

The mythical stories we tell about our heroes are always more romantic and often more palatable than the truth. But in science, at least, they are destructive, in that they promote false conceptions of the evolution of scientific thought. [Continue reading…]

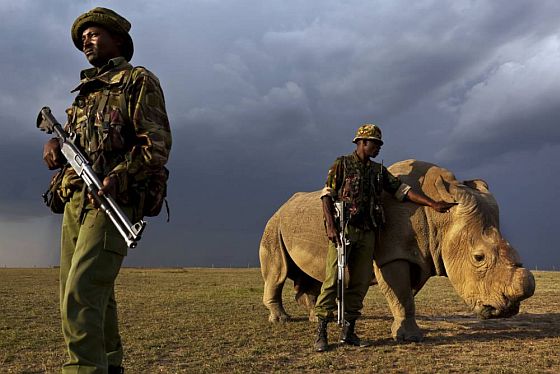

A picture of loneliness: You are looking at the last male northern white rhino

Jonathan Jones writes: What is it like to look at the very last of something? To contemplate the passing of a unique wonder that will soon vanish from the face of the earth? You are seeing it. Sudan is the last male northern white rhino on the planet. If he does not mate successfully soon with one of two female northern white rhinos at Ol Pejeta conservancy, there will be no more of their kind, male or female, born anywhere. And it seems a slim chance, as Sudan is getting old at 42 and breeding efforts have so far failed. Apart from these three animals there are only two other northern white rhinos in the world, both in zoos, both female.

It seems an image of human tenderness that Sudan is lovingly guarded by armed men who stand vigilantly and caringly with him. But of course it is an image of brutality. Even at this last desperate stage in the fate of the northern white rhino, poachers would kill Sudan if they could and hack off his horn to sell it on the Asian medicine market.

Sudan doesn’t know how precious he is. His eye is a sad black dot in his massive wrinkled face as he wanders the reserve with his guards. His head is a marvellous thing. It is a majestic rectangle of strong bone and leathery flesh, a head that expresses pure strength. How terrible that such a mighty head can in reality be so vulnerable. It is lowered melancholically beneath the sinister sky, as if weighed down by fate. This is the noble head of an old warrior, his armour battered, his appetite for struggle fading. [Continue reading…]

The world beyond your head

Matthew Crawford, author of The World Beyond Your Head, talks to Ian Tuttle.

Crawford: Only by excluding all the things that grab at our attention are we able to immerse ourselves in something worthwhile, and vice versa: When you become absorbed in something that is intrinsically interesting, that burden of self-regulation is greatly reduced.

Tuttle: To the present-day consequences. The first, and perhaps most obvious, consequence is a moral one, which you address in your harrowing chapter on machine gambling: “If we have no robust and demanding picture of what a good life would look like, then we are unable to articulate any detailed criticism of the particular sort of falling away from a good life that something like machine gambling represents.” To modern ears that sentence sounds alarmingly paternalistic. Is the notion of “the good life” possible in our age? Or is it fundamentally at odds with our political and/or philosophical commitments?

Crawford: Once you start digging into the chilling details of machine gambling, and of other industries such as mobile gaming apps that emulate the business model of “addiction by design” through behaviorist conditioning, you may indeed start to feel a little paternalistic — if we can grant that it is the role of a pater to make scoundrels feel unwelcome in the town.

According to the prevailing notion, freedom manifests as “preference-satisfying behavior.” About the preferences themselves we are to maintain a principled silence, out of deference to the autonomy of the individual. They are said to express the authentic core of the self, and are for that reason unavailable for rational scrutiny. But this logic would seem to break down when our preferences are the object of massive social engineering, conducted not by government “nudgers” but by those who want to monetize our attention.

My point in that passage is that liberal/libertarian agnosticism about the human good disarms the critical faculties we need even just to see certain developments in the culture and economy. Any substantive notion of what a good life requires will be contestable. But such a contest is ruled out if we dogmatically insist that even to raise questions about the good life is to identify oneself as a would-be theocrat. To Capital, our democratic squeamishness – our egalitarian pride in being “nonjudgmental” — smells like opportunity. Commercial forces step into the void of cultural authority, where liberals and libertarians fear to tread. And so we get a massive expansion of an activity — machine gambling — that leaves people compromised and degraded, as well as broke. And by the way, Vegas is no longer controlled by the mob. It’s gone corporate.

And this gets back to what I was saying earlier, about how our thinking is captured by obsolete polemics from hundreds of years ago. Subjectivism — the idea that what makes something good is how I feel about it — was pushed most aggressively by Thomas Hobbes, as a remedy for civil and religious war: Everyone should chill the hell out. Live and let live. It made sense at the time. This required discrediting all those who claim to know what is best. But Hobbes went further, denying the very possibility of having a better or worse understanding of such things as virtue and vice. In our time, this same posture of value skepticism lays the public square bare to a culture industry that is not at all shy about sculpting souls – through manufactured experiences, engineered to appeal to our most reliable impulses. That’s how one can achieve economies of scale. The result is a massification of the individual. [Continue reading…]

The science of scarcity

Harvard Magazine: Toward the end of World War II, while thousands of Europeans were dying of hunger, 36 men at the University of Minnesota volunteered for a study that would send them to the brink of starvation. Allied troops advancing into German-occupied territories with supplies and food were encountering droves of skeletal people they had no idea how to safely renourish, and researchers at the university had designed a study they hoped might reveal the best methods of doing so. But first, their volunteers had to agree to starve.

The physical toll on these men was alarming: their metabolism slowed by 40 percent; sitting on atrophied muscles became painful; though their limbs were skeletal, their fluid-filled bellies looked curiously stout. But researchers also observed disturbing mental effects they hadn’t expected: obsessions about cookbooks and recipes developed; men with no previous interest in food thought — and talked — about nothing else. Overwhelming, uncontrollable thoughts had taken over, and as one participant later recalled, “Food became the one central and only thing really in one’s life.” There was no room left for anything else.

Though these odd behaviors were just a footnote in the original Minnesota study, to professor of economics Sendhil Mullainathan, who works on contemporary issues of poverty, they were among the most intriguing findings. Nearly 70 years after publication, that “footnote” showed something remarkable: scarcity had stolen more than flesh and muscle. It had captured the starving men’s minds.

Mullainathan is not a psychologist, but he has long been fascinated by how the mind works. As a behavioral economist, he looks at how people’s mental states and social and physical environments affect their economic actions. Research like the Minnesota study raised important questions: What happens to our minds — and our decisions — when we feel we have too little of something? Why, in the face of scarcity, do people so often make seemingly irrational, even counter-productive decisions? And if this is true in large populations, why do so few policies and programs take it into account?

In 2008, Mullainathan joined Eldar Shafir, Tod professor of psychology and public affairs at Princeton, to write a book exploring these questions. Scarcity: Why Having Too Little Means So Much (2013) presented years of findings from the fields of psychology and economics, as well as new empirical research of their own. Based on their analysis of the data, they sought to show that, just as food had possessed the minds of the starving volunteers in Minnesota, scarcity steals mental capacity wherever it occurs—from the hungry, to the lonely, to the time-strapped, to the poor.

That’s a phenomenon well-documented by psychologists: if the mind is focused on one thing, other abilities and skills — attention, self-control, and long-term planning — often suffer. Like a computer running multiple programs, Mullainathan and Shafir explain, our mental processors begin to slow down. We don’t lose any inherent capacities, just the ability to access the full complement ordinarily available for use.

But what’s most striking — and in some circles, controversial — about their work is not what they reveal about the effects of scarcity. It’s their assertion that scarcity affects anyone in its grip. Their argument: qualities often considered part of someone’s basic character — impulsive behavior, poor performance in school, poor financial decisions — may in fact be the products of a pervasive feeling of scarcity. And when that feeling is constant, as it is for people mired in poverty, it captures and compromises the mind.

This is one of scarcity’s most insidious effects, they argue: creating mindsets that rarely consider long-term best interests. “To put it bluntly,” says Mullainathan, “if I made you poor tomorrow, you’d probably start behaving in many of the same ways we associate with poor people.” And just like many poor people, he adds, you’d likely get stuck in the scarcity trap. [Continue reading…]

The rhythm of consciousness

Gregory Hickok writes: In 1890, the American psychologist William James famously likened our conscious experience to the flow of a stream. “A ‘river’ or a ‘stream’ are the metaphors by which it is most naturally described,” he wrote. “In talking of it hereafter, let’s call it the stream of thought, consciousness, or subjective life.”

While there is no disputing the aptness of this metaphor in capturing our subjective experience of the world, recent research has shown that the “stream” of consciousness is, in fact, an illusion. We actually perceive the world in rhythmic pulses rather than as a continuous flow.

Some of the first hints of this new understanding came as early as the 1920s, when physiologists discovered brain waves: rhythmic electrical currents measurable on the surface of the scalp by means of electroencephalography. Subsequent research cataloged a spectrum of such rhythms (alpha waves, delta waves and so on) that correlated with various mental states, such as calm alertness and deep sleep.

Researchers also found that the properties of these rhythms varied with perceptual or cognitive events. The phase and amplitude of your brain waves, for example, might change if you saw or heard something, or if you increased your concentration on something, or if you shifted your attention.

But those early discoveries themselves did not change scientific thinking about the stream-like nature of conscious perception. Instead, brain waves were largely viewed as a tool for indexing mental experience, much like the waves that a ship generates in the water can be used to index the ship’s size and motion (e.g., the bigger the waves, the bigger the ship).

Recently, however, scientists have flipped this thinking on its head. We are exploring the possibility that brain rhythms are not merely a reflection of mental activity but a cause of it, helping shape perception, movement, memory and even consciousness itself. [Continue reading…]

Where are all the aliens?

A Norwegian campaign to legitimize the use of psychedelics

The New York Times reports: In a country so wary of drug abuse that it limits the sale of aspirin, Pal-Orjan Johansen, a Norwegian researcher, is pushing what would seem a doomed cause: the rehabilitation of LSD.

It matters little to him that the psychedelic drug has been banned here and around the world for more than 40 years. Mr. Johansen pitches his effort not as a throwback to the hippie hedonism of the 1960s, but as a battle for human rights and good health.

In fact, he also wants to manufacture MDMA and psilocybin, the active ingredients in two other prohibited substances, Ecstasy and so-called magic mushrooms.

All of that might seem quixotic at best, if only Mr. Johansen and EmmaSofia, the psychedelics advocacy group he founded with his American-born wife and fellow scientist, Teri Krebs, had not already won some unlikely supporters, including a retired Norwegian Supreme Court judge who serves as their legal adviser.

The group, whose name derives from street slang for MDMA and the Greek word for wisdom, stands in the vanguard of a global movement now pushing to revise drug policies set in the 1970s. That it has gained traction in a country so committed to controlling drug use shows how much old orthodoxies have crumbled. [Continue reading…]

Chain reactions spreading ideas through science and culture

David Krakauer writes: On Dec. 2, 1942, just over three years into World War II, President Roosevelt was sent the following enigmatic cable: “The Italian navigator has landed in the new world.” The accomplishments of Christopher Columbus had long since ceased to be newsworthy. The progress of the Italian physicist, Enrico Fermi, navigator across the territories of Lilliputian matter — the abode of the microcosm of the atom — was another thing entirely. Fermi’s New World, discovered beneath a Midwestern football field in Chicago, was the province of newly synthesized radioactive elements. And Fermi’s landing marked the earliest sustained and controlled nuclear chain reaction required for the construction of an atomic bomb.

This physical chain reaction was one of the links of scientific and cultural chain reactions initiated by the Hungarian physicist, Leó Szilárd. The first was in 1933, when Szilárd proposed the idea of a neutron chain reaction. Another was in 1939, when Szilárd and Einstein sent the now famous “Szilárd-Einstein” letter to Franklin D. Roosevelt informing him of the destructive potential of atomic chain reactions: “This new phenomenon would also lead to the construction of bombs, and it is conceivable — though much less certain — that extremely powerful bombs of a new type may thus be constructed.”

This scientific information in turn generated political and policy chain reactions: Roosevelt created the Advisory Committee on Uranium which led in yearly increments to the National Defense Research Committee, the Office of Scientific Research and Development, and finally, the Manhattan Project.

Life itself is a chain reaction. Consider a cell that divides into two cells and then four and then eight great-granddaughter cells. Infectious diseases are chain reactions. Consider a contagious virus that infects one host that infects two or more susceptible hosts, in turn infecting further hosts. News is a chain reaction. Consider a report spread from one individual to another, who in turn spreads the message to their friends and then on to the friends of friends.

These numerous connections that fasten together events are like expertly arranged dominoes of matter, life, and culture. As the modernist designer Charles Eames would have it, “Eventually everything connects — people, ideas, objects. The quality of the connections is the key to quality per se.”

Dominoes, atoms, life, infection, and news — all yield domino effects that require a sensitive combination of distances between pieces, physics of contact, and timing. When any one of these ingredients is off-kilter, the propagating cascade is likely to come to a halt. Premature termination is exactly what we might want to happen to a deadly infection, but it is the last thing that we want to impede an idea. [Continue reading…]