Category Archives: Science/Technology

Thousands of Einstein documents now accessible online

The New York Times reports: They have been called the Dead Sea Scrolls of physics. Since 1986, the Princeton University Press and the Hebrew University of Jerusalem, to whom Albert Einstein bequeathed his copyright, have been engaged in a mammoth effort to study some 80,000 documents he left behind.

Starting on Friday, when Digital Einstein is introduced, anyone with an Internet connection will be able to share in the letters, papers, postcards, notebooks and diaries that Einstein left scattered in Princeton and in other archives, attics and shoeboxes around the world when he died in 1955.

The Einstein Papers Project, currently edited by Diana Kormos-Buchwald, a professor of physics and the history of science at the California Institute of Technology, has already published 13 volumes in print out of a projected 30. [Continue reading…]

What is it like to be a bee?

In the minds of many humans, empathy is the signature of humanity, and yet if this empathy extends further and includes non-humans, we may be suspected of indulging in anthropomorphism: a sentimental projection of our own feelings into places where similar feelings supposedly cannot exist.

But the concept of anthropomorphism is itself a strange idea since it seems to invalidate what should be one of the most basic assumptions we can reasonably make about living creatures: that without the capacity to suffer, nothing would survive.

Just as the deadening of sensation makes people more susceptible to injury, an inability to feel pain would undermine any creature’s capacity to avoid harm.

The seemingly suicidal draw of the moth to a flame is the exception rather than the rule. Moreover, the insect is driven by a mistake, not a death wish. It is drawn towards the light, not the heat, oblivious that the two are one.

If humans indulge in projections about the feelings of others — human and non-human — perhaps we more commonly engage in negative projections: choosing to assume that feelings are absent where it would cause us discomfort to be attuned to their presence.

Our inclination is to avoid feeling too much and thus we construct neat enclosures for our concerns.

These enclosures shut out the feelings of strangers and then by extension seal away boundless life from which we have become even more estranged.

Heather Swan writes: It was a warm day in early spring when I had my first long conversation with the entomologist and science studies scholar Sainath Suryanarayanan. We met over a couple of hives I had recently inherited. One was thriving. Piles of dead bees filled the other. Parts of the comb were covered with mould and oozing something that looked like molasses.

Having recently attended a class for hobby beekeepers with Marla Spivak, an entomologist at the University of Minnesota, I was aware of the many different diseases to which bees are susceptible. American foulbrood, which was a mean one, concerned me most. Beekeepers recommended burning all of your equipment if you discovered it in your hives. Some of these bees were alive, but obviously in low spirits, and I didn’t want to destroy them unnecessarily. I called Sainath because I thought he could help me with the diagnosis.

Beekeeping, these days, is riddled with risks. New viruses, habitat loss, pesticides and mites all contribute to creating a deadly labyrinth through which nearly every bee must travel. Additionally, in 2004, mysterious bee disappearances began to plague thousands of beekeepers. Seemingly healthy bees started abandoning their homes. This strange disappearing act became known as colony collapse disorder (CCD).

Since then, the world has seen the decline of many other pollinating species, too. Because honeybees and other pollinators are responsible for pollinating at least one-third of all the food we eat, this is a serious problem globally. Diagnosing bee problems is not simple, but some answers are emerging. A ubiquitous class of pesticides called neonicotinoids has been implicated in pollinator decline, which has fuelled conversations among beekeepers, scientists, policy-makers and growers. A beekeeper facing a failing hive now has to consider not only the health of the hive itself, but also the health of the landscape around the hive. Dead bees lead beekeepers down a path of many questions. And some beekeepers have lost so many hives, they feel like giving up.

When we met at my troubled hives, Sainath brought his own hive tool and veil. He had already been down a path of many questions about bee deaths, one that started in his youth with a fascination for observing insects. When he was 14, he began his ‘Amateur Entomologist’s Record’, where he kept taxonomic notes on such things as wing textures, body shapes, colour patterns and behaviours. But the young scientist’s approach occasionally slipped to include his exuberance, describing one moment as ‘a stupefying experience!’ All this led him to study biology and chemistry in college, then to work on the behavioural ecology of paper wasps during his doctoral studies, and eventually to Minnesota to help Spivak investigate the role of pesticides in CCD.

Sainath had spent several years doing lab and field experiments with wasps and bees, but ultimately wanted to shift from traditional practices in entomology to research that included human/insect relationships. It was Sainath who made me wonder about the role of emotion in science – both in the scientists themselves and in the subjects of their experiments. I had always thought of emotion as something excised from science, but this was impossible for some scientists. What was the role of empathy in experimentation? How do we, with our human limitations, understand something as radically different from us as the honeybee? Did bees have feelings, too? If so, what did that mean for the scientist? For the science? [Continue reading…]

Researchers announce major advance in image-recognition software

The New York Times reports: Two groups of scientists, working independently, have created artificial intelligence software capable of recognizing and describing the content of photographs and videos with far greater accuracy than ever before, sometimes even mimicking human levels of understanding.

Until now, so-called computer vision has largely been limited to recognizing individual objects. The new software, described on Monday by researchers at Google and at Stanford University, teaches itself to identify entire scenes: a group of young men playing Frisbee, for example, or a herd of elephants marching on a grassy plain.

The software then writes a caption in English describing the picture. Compared with human observations, the researchers found, the computer-written descriptions are surprisingly accurate.

The advances may make it possible to better catalog and search for the billions of images and hours of video available online, which are often poorly described and archived. At the moment, search engines like Google rely largely on written language accompanying an image or video to ascertain what it contains. [Continue reading…]

Hydrogen cars about to go on sale. Their only emission: water

The New York Times reports: Remember the hydrogen car?

A decade ago, President George W. Bush espoused the environmental promise of cars running on hydrogen, the universe’s most abundant element. “The first car driven by a child born today,” he said in his 2003 State of the Union speech, “could be powered by hydrogen, and pollution-free.”

That changed under Steven Chu, the Nobel Prize-winning physicist who was President Obama’s first Secretary of Energy. “We asked ourselves, ‘Is it likely in the next 10 or 15, 20 years that we will convert to a hydrogen-car economy?’” Dr. Chu said then. “The answer, we felt, was ‘no.’ ” The administration slashed funding for hydrogen fuel cell research.

Attention shifted to battery electric vehicles, particularly those made by the headline-grabbing Tesla Motors.

The hydrogen car, it appeared, had died. And many did not mourn its passing, particularly those who regarded the auto companies’ interest in hydrogen technology as a stunt to signal that they cared about the environment while selling millions of highly profitable gas guzzlers.

Except the companies, including General Motors, Honda, Toyota, Daimler and Hyundai, persisted.

After many years and billions of dollars of research and development, hydrogen cars are headed to the showrooms. [Continue reading…]

Wonder and the ends of inquiry

Lorraine Daston writes: Science and wonder have a long and ambivalent relationship. Wonder is a spur to scientific inquiry but also a reproach and even an inhibition to inquiry. As philosophers never tire of repeating, only those ignorant of the causes of things wonder: the solar eclipse that terrifies illiterate peasants is no wonder to the learned astronomer who can explain and predict it. Romantic poets accused science of not just neutralizing wonder but of actually killing it. Modern popularizations of science make much of wonder — but expressions of that passion are notably absent in professional publications. This love-hate relationship between wonder and science started with science itself.

Wonder always comes at the beginning of inquiry. “For it is owing to their wonder that men both now begin and at first began to philosophize,” explains Aristotle; Descartes made wonder “the first of the passions,” and the only one without a contrary, opposing passion. In these and many other accounts of wonder, both soul and senses are ambushed by a puzzle or a surprise, something that catches us unawares and unprepared. Wonder widens the eyes, opens the mouth, stops the heart, freezes thought. Above all, at least in classical accounts like those of Aristotle and Descartes, wonder both diagnoses and cures ignorance. It reveals that there are more things in heaven and earth than have been dreamt of in our philosophy; ideally, it also spurs us on to find an explanation for the marvel.

Therein lies the paradox of wonder: it is the beginning of inquiry (Descartes remarks that people deficient in wonder “are ordinarily quite ignorant”), but the end of inquiry also puts an end to wonder. [Continue reading…]

Slaves of productivity

Quinn Norton writes: We dream now of making Every Moment Count, of achieving flow and never leaving, creating one project that must be better than the last, of working harder and smarter. We multitask, we update, and we conflate status with long hours worked, under systems with no paid overtime, for the nebulous and fantastic status of being Too Important to have Time to Ourselves, time to waste. But this incarnation of the American dream is all about doing, and nothing about doing anything good, or even thinking about what one is doing beyond how to do more of it more efficiently. It is not even the surrenders to hedonism and debauchery or greed that our literary dreams have recorded before. It is a surrender to nothing, to a nothingness of lived accounting.

This moment’s goal of productivity, with its all-consuming practice and unattainable horizon, is perfect for our current corporate world. Productivity never asks what it builds, just how much of it can be piled up before we leave or die. It is irrelevant to pleasure. It’s agnostic about the fate of humanity. It’s not even selfish, because production negates the self. Self can only be a denominator, holding up a dividing bar like a caryatid trying to hold up a stone roof.

I am sure this started with the Industrial Revolution, but what has swept through this generation is more recent. This idea of productivity started in the 1980s, with the lionizing of the hardworking greedy. There’s a critique of late capitalism to be had for sure, but what really devastated my generation was the spiritual malaise inherent in Taylorism’s perfectly mechanized human labor. But Taylor had never seen a robot or a computer perfect his methods of being human. By the 1980s, we had. In the age of robots we reinvented the idea of being robots ourselves. We wanted to program our minds and bodies and have them obey clocks and routines. In this age of the human robot, of the materialist mind, being efficient took the pre-eminent spot, beyond goodness or power or wisdom or even cruel greed. [Continue reading…]

Getting beyond debates over science

Paul Voosen writes: Last year, as the summer heat broke, a congregation of climate scientists and communicators gathered at the headquarters of the American Association for the Advancement of Science, a granite edifice erected in the heart of Washington, to wail over their collective futility.

Year by year, the evidence for human-caused global warming has grown more robust. Greenhouse gases load the air and sea. Temperatures rise. Downpours strengthen. Ice melts. Yet the American public seems, from cursory glances at headlines and polls, more divided than ever on the basic existence of climate change, in spite of scientists’ many, many warnings. Their message, the attendees fretted, simply wasn’t getting through.

This worry wasn’t just about climate change, but also about stem cells. Genetically modified food. Vaccines. Nuclear power. And, of course, evolution: Challenging scientific reality seems to be an increasingly common feature of American life. Some researchers have gone so far as to accuse one political party, the Republicans, of making “science denial” a bedrock principle. The authority attributed to scientists for a century is crumbling.

It is a disturbing story. It is also, in many ways, a fairy tale. So says Dan M. Kahan, a law professor at Yale University who, over the past decade, has run an insurgent research campaign into how the public understands science. Through a magpie synthesis of psychology, risk perception, anthropology, political science, and communication research, leavened with heavy doses of empiricism and idol bashing, he has exposed the tribal biases that mediate our encounters with scientific knowledge. It’s a dynamic he calls cultural cognition. [Continue reading…]

The faster we go, the more time we lose

Mark C. Taylor writes: “Sleeker. Faster. More Intuitive” (The New York Times); “Welcome to a world where speed is everything” (Verizon FiOS); “Speed is God, and time is the devil” (chief of Hitachi’s portable-computer division). In “real” time, life speeds up until time itself seems to disappear—fast is never fast enough, everything has to be done now, instantly. To pause, delay, stop, slow down is to miss an opportunity and to give an edge to a competitor. Speed has become the measure of success—faster chips, faster computers, faster networks, faster connectivity, faster news, faster communications, faster transactions, faster deals, faster delivery, faster product cycles, faster brains, faster kids. Why are we so obsessed with speed, and why can’t we break its spell?

The cult of speed is a modern phenomenon. In “The Futurist Manifesto” in 1909, Filippo Tommaso Marinetti declared, “We say that the splendor of the world has been enriched by a new beauty: the beauty of speed.” The worship of speed reflected and promoted a profound shift in cultural values that occurred with the advent of modernity and modernization. With the emergence of industrial capitalism, the primary values governing life became work, efficiency, utility, productivity, and competition. When Frederick Winslow Taylor took his stopwatch to the factory floor in the early 20th century to increase workers’ efficiency, he began a high-speed culture of surveillance so memorably depicted in Charlie Chaplin’s Modern Times. Then, as now, efficiency was measured by the maximization of rapid production through the programming of human behavior.

With the transition from mechanical to electronic technologies, speed increased significantly. The invention of the telegraph, telephone, and stock ticker liberated communication from the strictures imposed by the physical means of conveyance. Previously, messages could be sent no faster than people, horses, trains, or ships could move. By contrast, immaterial words, sounds, information, and images could be transmitted across great distances at very high speed. During the latter half of the 19th century, railway and shipping companies established transportation networks that became the backbone of national and international information networks. When the trans-Atlantic cable (1858) and transcontinental railroad (1869) were completed, the foundation for the physical infrastructure of today’s digital networks was in place.

Fast-forward 100 years. During the latter half of the 20th century, information, communications, and networking technologies expanded rapidly, and transmission speed increased exponentially. But more than data and information were moving faster. Moore’s Law, according to which the number of transistors on a computer chip, and with it roughly the chip’s speed, doubles about every two years, now seems to apply to life itself. Plugged in 24/7/365, we are constantly struggling to keep up but are always falling further behind. The faster we go, the less time we seem to have. As our lives speed up, stress increases, and anxiety trickles down from managers to workers, and from parents to children. [Continue reading…]

Beyond the Bell Curve, a new universal law

Natalie Wolchover writes: Imagine an archipelago where each island hosts a single tortoise species and all the islands are connected — say by rafts of flotsam. As the tortoises interact by dipping into one another’s food supplies, their populations fluctuate.

In 1972, the biologist Robert May devised a simple mathematical model that worked much like the archipelago. He wanted to figure out whether a complex ecosystem can ever be stable or whether interactions between species inevitably lead some to wipe out others. By indexing chance interactions between species as random numbers in a matrix, he calculated the critical “interaction strength” — a measure of the number of flotsam rafts, for example — needed to destabilize the ecosystem. Below this critical point, all species maintained steady populations. Above it, the populations shot toward zero or infinity.
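May’s tipping point is usually summarized as a single inequality. A sketch of that standard statement (not a derivation, and not the article’s own notation): n species each damp their own fluctuations at unit rate, a fraction C of the possible pairs interact (the “connectance”), and the interaction strengths are random with mean zero and standard deviation α. For large n, the eigenvalues of the random interactions spread over a disk of radius α√(nC) centered at −1, so the community is almost surely stable only while that disk stays in the left half of the complex plane. The threshold α_c plays the role of the critical “interaction strength” described above.

```latex
% Community matrix for n species: J = -I + B.
% Each off-diagonal entry of B is nonzero with probability C (the connectance);
% nonzero entries have mean 0 and standard deviation \alpha.
% For large n the eigenvalues of B fill a disk of radius \alpha\sqrt{nC},
% so the eigenvalues of J fill the same disk, centered at -1.
\max_k \operatorname{Re}\lambda_k(J) \approx -1 + \alpha\sqrt{nC}
\quad\Longrightarrow\quad
\begin{cases}
\alpha\sqrt{nC} < 1 & \text{almost surely stable},\\
\alpha\sqrt{nC} > 1 & \text{almost surely unstable},
\end{cases}
\qquad
\alpha_c = \frac{1}{\sqrt{nC}}.
```

Below α_c every small disturbance dies away (steady populations); above it, at least one mode grows, which is the runaway toward zero or infinity that the article describes.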

Little did May know, the tipping point he discovered was one of the first glimpses of a curiously pervasive statistical law.

The law appeared in full form two decades later, when the mathematicians Craig Tracy and Harold Widom proved that the critical point in the kind of model May used was the peak of a statistical distribution. Then, in 1999, Jinho Baik, Percy Deift and Kurt Johansson discovered that the same statistical distribution also describes variations in sequences of shuffled integers — a completely unrelated mathematical abstraction. Soon the distribution appeared in models of the wriggling perimeter of a bacterial colony and other kinds of random growth. Before long, it was showing up all over physics and mathematics.
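The “shuffled integers” result concerns the length of the longest increasing subsequence of a random permutation of 1, 2, …, n. A minimal sketch using only Python’s standard library: patience sorting computes that length in O(n log n), and the rescaled quantity (L_n − 2√n) / n^(1/6) is the one that, by Baik, Deift and Johansson’s theorem, converges to the Tracy-Widom distribution as n grows. The function names, the choice of n and the sample count are illustrative assumptions, not anything from the article.

```python
import bisect
import math
import random


def longest_increasing_subsequence_length(seq):
    """Length of the longest strictly increasing subsequence (patience sorting).

    tails[k] holds the smallest tail value of any increasing subsequence of
    length k + 1 seen so far; the list stays sorted, so each new element is
    placed with a binary search.
    """
    tails = []
    for x in seq:
        i = bisect.bisect_left(tails, x)
        if i == len(tails):
            tails.append(x)  # x extends the longest subsequence found so far
        else:
            tails[i] = x  # x is a smaller tail for subsequences of length i + 1
    return len(tails)


def rescaled_lis(n, rng):
    """Shuffle 1..n and return (L_n - 2*sqrt(n)) / n**(1/6).

    As n grows, this quantity converges in distribution to the
    Tracy-Widom (GUE) law (Baik-Deift-Johansson theorem).
    """
    perm = list(range(1, n + 1))
    rng.shuffle(perm)
    length = longest_increasing_subsequence_length(perm)
    return (length - 2 * math.sqrt(n)) / n ** (1 / 6)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    samples = [rescaled_lis(5_000, rng) for _ in range(100)]
    mean = sum(samples) / len(samples)
    print(f"mean of rescaled LIS over {len(samples)} shuffles: {mean:.2f}")
```

The limiting Tracy-Widom (GUE) distribution has a mean of about −1.77. Convergence is slow, so a simulation at this size should only be expected to give a negative average of order one, not that exact value.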

“The big question was why,” said Satya Majumdar, a statistical physicist at the University of Paris-Sud. “Why does it pop up everywhere?” [Continue reading…]

When digital nature replaces nature

Diane Ackerman writes: Last summer, I watched as a small screen in a department store window ran a video of surfing in California. That simple display mesmerized high-heeled, pin-striped, well-coiffed passersby who couldn’t take their eyes off the undulating ocean and curling waves that dwarfed the human riders. Just as our ancient ancestors drew animals on cave walls and carved animals from wood and bone, we decorate our homes with animal prints and motifs, give our children stuffed animals to clutch, cartoon animals to watch, animal stories to read. Our lives trumpet, stomp, and purr with animal tales, such as The Bat Poet, The Velveteen Rabbit, Aesop’s Fables, The Wind in the Willows, The Runaway Bunny, and Charlotte’s Web. I first read these wondrous books as a grown-up, when both the adult and the kid in me were completely spellbound. We call each other by “pet” names, wear animal-print clothes. We ogle plants and animals up close on screens of one sort or another. We may not worship or hunt the animals we see, but we still regard them as necessary physical and spiritual companions. It seems the more we exile ourselves from nature, the more we crave its miracle waters. Yet technological nature can’t completely satisfy that ancient yearning.

What if, through novelty and convenience, digital nature replaces biological nature? Gradually, we may grow used to shallower and shallower experiences of nature. Studies show that we’ll suffer. Richard Louv writes of widespread “nature deficit disorder” among children who mainly play indoors, an oddity quite new in the history of humankind. He documents an upswell in attention disorders, obesity, depression, and lack of creativity. A San Diego fourth-grader once told him: “I like to play indoors because that’s where all the electrical outlets are.” Adults suffer equally. It’s telling that hospital patients with a view of trees heal faster than those gazing at city buildings and parking lots. In studies conducted by Peter H. Kahn and his colleagues at the University of Washington, office workers in windowless cubicles were given flat-screen views of nature. They enjoyed greater health, happiness, and efficiency than those without virtual windows. But they weren’t as happy, healthy, or creative as people given real windows with real views of nature.

As a species, we’ve somehow survived large and small ice ages, genetic bottlenecks, plagues, world wars, and all manner of natural disasters, but I sometimes wonder if we’ll survive our own ingenuity. At first glance, it seems like we may be living in sensory overload. The new technology, for all its boons, also bedevils us with speed demons, alluring distractors, menacing hijinks, cyber-bullies, thought-nabbers, calm-frayers, and a spiky wad of miscellaneous news. Some days it feels like we’re drowning in a twittering bog of information. But, at exactly the same time, we’re living in sensory poverty, learning about the world without experiencing it up close, right here, right now, in all its messy, majestic, riotous detail. Like seeing icebergs without the cold, without squinting in the Antarctic glare, without the bracing breaths of dry air, without hearing the chorus of lapping waves and shrieking gulls. We lose the salty smell of the cold sea, the burning touch of ice. If, reading this, you can taste those sensory details in your mind, is that because you’ve experienced them in some form before, as actual experience? If younger people never experience them, can they respond to words on the page in the same way?

The farther we distance ourselves from the spell of the present, explored by all our senses, the harder it will be to understand and protect nature’s precarious balance, let alone the balance of our own human nature. [Continue reading…]

Scientists got it wrong on gravitational waves. So what?

Philip Ball writes: It was announced in headlines worldwide as one of the biggest scientific discoveries for decades, sure to garner Nobel prizes. But now it looks likely that the alleged evidence of both gravitational waves and ultra-fast expansion of the universe in the big bang (called inflation) has literally turned to dust.

Last March, a team using a telescope called Bicep2 at the South Pole claimed to have read the signatures of these two elusive phenomena in the twisting patterns of the cosmic microwave background radiation: the afterglow of the big bang. But this week, results from an international consortium using a space telescope called Planck show that Bicep2’s data is likely to have come not from the microwave background but from dust scattered through our own galaxy.

Some will regard this as a huge embarrassment, not only for the Bicep2 team but for science itself. Already some researchers have criticised the team for making a premature announcement to the press before their work had been properly peer reviewed.

But there’s no shame here. On the contrary, this episode is good for science. [Continue reading…]

Brain shrinkage, poor concentration, anxiety, and depression linked to media-multitasking

Simultaneously using mobile phones, laptops and other media devices could be changing the structure of our brains, according to new University of Sussex research.

A study published today (24 September) in PLOS ONE reveals that people who frequently use several media devices at the same time have lower grey-matter density in one particular region of the brain compared to those who use just one device occasionally.

The research supports earlier studies showing connections between high media-multitasking activity and poor attention in the face of distractions, along with emotional problems such as depression and anxiety.

But neuroscientists Kep Kee Loh and Dr Ryota Kanai point out that their study reveals a link rather than causality and that a long-term study needs to be carried out to understand whether high concurrent media usage leads to changes in the brain structure, or whether those with less-dense grey matter are more attracted to media multitasking. [Continue reading…]

Earth’s magnetic field polarity could flip sooner than expected

Scientific American reports: Earth’s magnetic field, which protects the planet from huge blasts of deadly solar radiation, has been weakening over the past six months, according to data collected by a European Space Agency (ESA) satellite array called Swarm.

The biggest weak spots in the magnetic field — which extends 370,000 miles (600,000 kilometers) above the planet’s surface — have sprung up over the Western Hemisphere, while the field has strengthened over areas like the southern Indian Ocean, according to the magnetometers onboard the Swarm satellites — three separate satellites floating in tandem.

The scientists who conducted the study are still unsure why the magnetic field is weakening, but one likely reason is that Earth’s magnetic poles are getting ready to flip, said Rune Floberghagen, the ESA’s Swarm mission manager. In fact, the data suggest magnetic north is moving toward Siberia.

“Such a flip is not instantaneous, but would take many hundred if not a few thousand years,” Floberghagen told Live Science. “They have happened many times in the past.”

Scientists already know that magnetic north shifts. Once every few hundred thousand years the magnetic poles flip so that a compass would point south instead of north. While changes in magnetic field strength are part of this normal flipping cycle, data from Swarm have shown the field is starting to weaken faster than in the past. Previously, researchers estimated the field was weakening about 5 percent per century, but the new data revealed the field is actually weakening at 5 percent per decade, or 10 times faster than thought. As such, rather than the full flip occurring in about 2,000 years, as was predicted, the new data suggest it could happen sooner. [Continue reading…]
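The rate comparison is simple arithmetic. A minimal sketch, assuming (our assumption, not the article’s) that each quoted percentage compounds evenly year by year: it checks that 5 percent per decade is about ten times the annual rate implied by 5 percent per century, and shows how long each rate would take to halve today’s field strength. Halving is an arbitrary illustrative benchmark; this is not a model of when a reversal would happen, and the function names are hypothetical.

```python
import math

# Rates as quoted in the article: fractional loss of field strength per period.
OLD_LOSS_PER_CENTURY = 0.05  # earlier estimate: about 5 percent per century
NEW_LOSS_PER_DECADE = 0.05   # Swarm-based estimate: about 5 percent per decade


def per_year_rate(loss_per_period, years_per_period):
    """Annual fractional loss, assuming the loss compounds evenly each year."""
    return 1 - (1 - loss_per_period) ** (1 / years_per_period)


def years_to_halve(annual_loss):
    """Years for the field to fall to 50% of today's strength at a constant annual loss."""
    return math.log(0.5) / math.log(1 - annual_loss)


old_annual = per_year_rate(OLD_LOSS_PER_CENTURY, 100)
new_annual = per_year_rate(NEW_LOSS_PER_DECADE, 10)

print(f"speed-up factor: {new_annual / old_annual:.1f}x")
print(f"years to halve at the old rate: {years_to_halve(old_annual):.0f}")
print(f"years to halve at the new rate: {years_to_halve(new_annual):.0f}")
```

Under these assumptions the script reports a speed-up of about 10x and halving times of roughly 1,350 years at the old rate versus about 135 years at the new one.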

In a grain, a glimpse of the cosmos

Natalie Wolchover writes: One January afternoon five years ago, Princeton geologist Lincoln Hollister opened an email from a colleague he’d never met bearing the subject line, “Help! Help! Help!” Paul Steinhardt, a theoretical physicist and the director of Princeton’s Center for Theoretical Science, wrote that he had an extraordinary rock on his hands, one that he thought was natural but whose origin and formation he could not identify. Hollister had examined tons of obscure rocks over his five-decade career and agreed to take a look.

Originally a dense grain two or three millimeters across that had been ground down into microscopic fragments, the rock was a mishmash of lustrous metal and matte mineral of a yellowish hue. It reminded Hollister of something from Oregon called josephinite. He told Steinhardt that such rocks typically form deep underground at the boundary between Earth’s core and mantle or near the surface due to a particular weathering phenomenon. “Of course, all of that ended up being a false path,” said Hollister, 75. The more the scientists studied the rock, the stranger it seemed.

After five years, approximately 5,000 Steinhardt-Hollister emails and a treacherous journey to the barren arctic tundra of northeastern Russia, the mystery has only deepened. Today, Steinhardt, Hollister and 15 collaborators reported the curious results of a long and improbable detective story. Their findings, detailed in the journal Nature Communications, reveal new aspects of the solar system as it was 4.5 billion years ago: chunks of incongruous metal inexplicably orbiting the newborn sun, a collision of extraordinary magnitude, and the creation of new minerals, including an entire class of matter never before seen in nature. It’s a drama etched in the geochemistry of a truly singular rock. [Continue reading…]

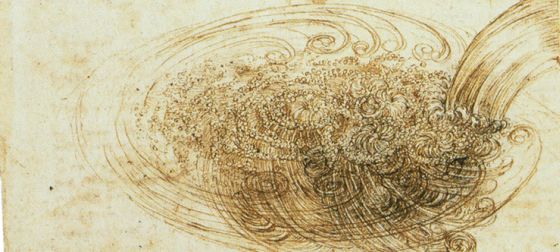

To understand turbulence we need the intuitive perspective of art

Philip Ball writes: When the German physicist Arnold Sommerfeld assigned his most brilliant student a subject for his doctoral thesis in 1923, he admitted that “I would not have proposed a topic of this difficulty to any of my other pupils.” Those others included such geniuses as Wolfgang Pauli and Hans Bethe, yet for Sommerfeld the only one who was up to the challenge of this subject was Werner Heisenberg.

Heisenberg went on to be a key founder of quantum theory and was awarded the 1932 Nobel Prize in physics. He developed one of the first mathematical descriptions of this new and revolutionary discipline, discovered the uncertainty principle, and together with Niels Bohr engineered the “Copenhagen interpretation” of quantum theory, to which many physicists still adhere today.

The subject of Heisenberg’s doctoral dissertation, however, wasn’t quantum physics. It was harder than that. The 59-page calculation that he submitted to the faculty of the University of Munich in 1923 was titled “On the stability and turbulence of fluid flow.”

Sommerfeld had been contacted by the Isar Company of Munich, which was contracted to prevent the Isar River from flooding by building up its banks. The company wanted to know at what point the river flow changed from being smooth (the technical term is “laminar”) to being turbulent, beset with eddies. That question requires some understanding of what turbulence is. Heisenberg’s work on the problem was impressive—he solved the mathematical equations of flow at the point of the laminar-to-turbulent change—and it stimulated ideas for decades afterward. But he didn’t really crack it—he couldn’t construct a comprehensive theory of turbulence.

Heisenberg was not given to modesty, but it seems he had no illusions about his achievements here. One popular story goes that he once said, “When I meet God, I am going to ask him two questions. Why relativity? And why turbulence? I really believe he will have an answer for the first.”

It is probably an apocryphal tale. The same remark has been attributed to at least one other person: The British mathematician and expert on fluid flow, Horace Lamb, is said to have hoped that God might enlighten him on quantum electrodynamics and turbulence, saying that “about the former I am rather optimistic.”

You get the point: turbulence, a ubiquitous and eminently practical problem in the real world, is frighteningly hard to understand. [Continue reading…]

No, a ‘supercomputer’ did NOT pass the Turing Test for the first time and everyone should know better

Following numerous “reports” (i.e. numerous regurgitations of a press release from Reading University) on an “historic milestone in artificial intelligence” having been passed “for the very first time by supercomputer Eugene Goostman” at an event organized by Professor Kevin Warwick, Mike Masnick writes:

If you’ve spent any time at all in the tech world, you should automatically have red flags raised around that name. Warwick is somewhat infamous for his ridiculous claims to the press, which gullible reporters repeat without question. He’s been doing it for decades. All the way back in 2000, we were writing about all the ridiculous press he got for claiming to be the world’s first “cyborg” for implanting a chip in his arm. There was even a (since taken down) Kevin Warwick Watch website that mocked and categorized all of his media appearances in which gullible reporters simply repeated all of his nutty claims. Warwick had gone quiet for a while, but back in 2010, we wrote about how his lab was getting bogus press for claiming to have “the first human infected with a computer virus.” The Register has rightly referred to Warwick as both “Captain Cyborg” and a “media strumpet” and has been chronicling his escapades in exaggerating bogus stories about the intersection of humans and computers for many, many years.

Basically, any reporter should view extraordinary claims associated with Warwick with extreme caution. But that’s not what happened at all. Instead, as is all too typical with Warwick claims, the press went nutty over it, including publications that should know better.

Anyone can try having a “conversation” with Eugene Goostman.

If the strings of words it spits out give you the impression you’re talking to a human being, that’s probably an indication that you don’t spend enough time talking to human beings.

Physicists report finding reliable way to teleport data

The New York Times reports: Scientists in the Netherlands have moved a step closer to overriding one of Albert Einstein’s most famous objections to the implications of quantum mechanics, which he described as “spooky action at a distance.”

In a paper published on Thursday in the journal Science, physicists at the Kavli Institute of Nanoscience at the Delft University of Technology reported that they were able to reliably teleport information between two quantum bits separated by three meters, or about 10 feet.

Quantum teleportation is not the “Star Trek”-style movement of people or things; rather, it involves transferring so-called quantum information — in this case what is known as the spin state of an electron — from one place to another without moving the physical matter to which the information is attached.

Classical bits, the basic units of information in computing, can have only one of two values — either 0 or 1. But quantum bits, or qubits, can simultaneously describe many values. They hold out both the possibility of a new generation of faster computing systems and the ability to create completely secure communication networks.
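The teleportation protocol itself can be simulated on an ordinary computer for a single qubit, whose state is a pair of complex amplitudes (alpha, beta) for |0> and |1>. A minimal state-vector sketch in plain Python, assuming the textbook protocol: sender and receiver share an entangled pair, the sender applies a CNOT and a Hadamard and measures two qubits, and the receiver applies an X and/or Z correction chosen by the two classical bits. It says nothing about how the Delft team’s electron-spin hardware works, and all function names are hypothetical. It checks that, for every measurement outcome, the receiver’s qubit ends up in the sender’s original state.

```python
import cmath
import math

# Basis index for three qubits: index = 4*q0 + 2*q1 + q2.
# q0: message qubit (sender); q1: sender's half of the entangled pair;
# q2: receiver's half of the entangled pair.
N_QUBITS = 3
S = 1 / math.sqrt(2)
H = [[S, S], [S, -S]]  # Hadamard gate


def bit(index, qubit):
    """Value (0 or 1) of `qubit` in basis state `index` (qubit 0 is most significant)."""
    return (index >> (N_QUBITS - 1 - qubit)) & 1


def apply_gate(state, gate, target):
    """Apply a 2x2 gate to one qubit of a 3-qubit state vector."""
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        b = bit(i, target)
        flipped = i ^ (1 << (N_QUBITS - 1 - target))
        for out in (0, 1):
            j = i if out == b else flipped
            new[j] += gate[out][b] * amp
    return new


def apply_cnot(state, control, target):
    """Flip `target` in every basis state where `control` is 1."""
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        j = i ^ (1 << (N_QUBITS - 1 - target)) if bit(i, control) else i
        new[j] += amp
    return new


def teleport(alpha, beta):
    """Teleport alpha|0> + beta|1> from q0 to q2; return the corrected q2 state per outcome."""
    # Message qubit times the Bell pair (|00> + |11>)/sqrt(2) on q1, q2.
    state = [0j] * 8
    for q0, amp in ((0, alpha), (1, beta)):
        state[4 * q0 + 0] = amp * S  # q1 = 0, q2 = 0
        state[4 * q0 + 3] = amp * S  # q1 = 1, q2 = 1

    # Sender: CNOT from the message qubit onto her half, then Hadamard on the message qubit.
    state = apply_cnot(state, control=0, target=1)
    state = apply_gate(state, H, target=0)

    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            # Receiver's qubit, conditioned on the sender measuring (m0, m1).
            bob = [state[4 * m0 + 2 * m1 + q2] for q2 in (0, 1)]
            norm = math.sqrt(sum(abs(c) ** 2 for c in bob))
            bob = [c / norm for c in bob]
            if m1:  # X correction: swap the |0> and |1> amplitudes
                bob = [bob[1], bob[0]]
            if m0:  # Z correction: flip the sign of the |1> amplitude
                bob = [bob[0], -bob[1]]
            results[(m0, m1)] = bob
    return results


if __name__ == "__main__":
    alpha, beta = math.cos(0.3), cmath.exp(0.7j) * math.sin(0.3)
    for outcome, (a, b) in teleport(alpha, beta).items():
        ok = abs(a - alpha) < 1e-12 and abs(b - beta) < 1e-12
        print(outcome, "recovered" if ok else "mismatch")
```

Only two classical bits travel from sender to receiver; the amplitudes themselves are never transmitted, which is why the state is said to be teleported rather than copied.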

Moreover, the scientists are now closer to definitively proving Einstein wrong in his early disbelief in the notion of entanglement, in which particles separated by light-years can still appear to remain connected, with the state of one particle instantaneously affecting the state of another. [Continue reading…]